Trace Datasets for Agentic AI: Structuring and Optimizing Traces for Automated Agent Evaluation

Trace datasets reveal how AI agents behave and enable automated agentic AI evaluation for reliability, safety, and compliance.

Collection, Annotation, Classification, Transcription, and Model Development

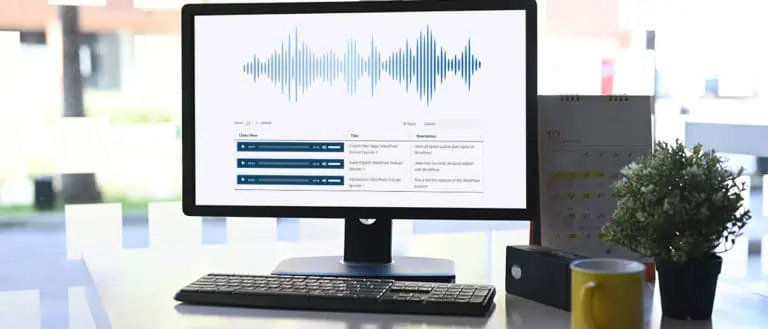

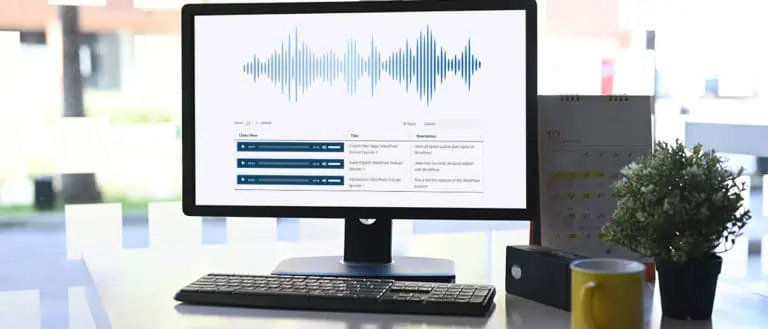

With Innodata’s full suite of audio and speech data collection services, you can scale your AI models and ensure model flexibility with high-quality, diverse data spanning multiple languages, dialects, demographics, speaker traits, dialogue types, environments, and scenarios. Let Innodata’s global network of 4,000+ experts, including native speakers of 40+ languages, capture the samples you need for any initiative.

From field recordings (for example, in homes, restaurants, and gyms) to in-studio recordings, our diverse situational audio and speech data can support virtually any use case.

With our network of global subject matter experts and in-country native-speaking teams, we can provide multi-scenario and actor-based recordings for your initiatives in 40+ languages.

Innodata can collect audio and speech data with diverse cultural, demographic (like gender and age), sentiment, intent, and linguistic characteristics.

Innodata offers multiple dialogue types, such as single-speaker (monologue), two-speaker, and multi-speaker conversations.

We provide a wide range of recording-device scenarios for any AI initiative, including audio recorded on handheld devices, telephones, speakers, or computers.

Innodata can deliver speech and audio data that meets any project specification, such as overall sample size, number of utterances per speaker, scripted vs. spontaneous speech, and natural environments vs. staged scenarios.

With Innodata’s full suite of audio and speech data annotation services, you can scale your AI models and ensure model flexibility with high-quality annotated data. Leverage Innodata’s deep annotation expertise to streamline audio annotation, classification, and transcription using natural language processing (NLP) and human experts-in-the-loop.

Innodata can partition speech and audio files to meet any model-training need, such as segmenting different speakers, labeling start and stop times, and tagging speech vs. background noise, music, and silence.
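To make the segmentation deliverable more concrete, here is a minimal Python sketch of what a segment-level annotation record and a basic consistency check might look like. The `Segment` class, the label set, and the `validate` and `speaking_time` helpers are hypothetical names chosen for illustration, not Innodata's delivery format. The check also assumes a single-track segmentation in which segments never overlap; projects that mark overlapping speech would relax that rule.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical label taxonomy for illustration; real projects define their own.
LABELS = {"speech", "noise", "music", "silence"}


@dataclass(frozen=True)
class Segment:
    start: float                   # seconds from the start of the file
    end: float                     # seconds from the start of the file
    label: str                     # expected to be one of LABELS
    speaker: Optional[str] = None  # speaker ID; required only for speech


def validate(segments: list[Segment]) -> list[str]:
    """Return human-readable problems; an empty list means the segmentation passed.

    Assumes a single-track segmentation in which segments must not overlap.
    Indices in messages refer to segments sorted by start time.
    """
    problems = []
    ordered = sorted(segments, key=lambda s: s.start)
    for i, seg in enumerate(ordered):
        if seg.label not in LABELS:
            problems.append(f"segment {i}: unknown label {seg.label!r}")
        if seg.end <= seg.start:
            problems.append(f"segment {i}: end is not after start")
        if seg.label == "speech" and seg.speaker is None:
            problems.append(f"segment {i}: speech segment has no speaker")
        if i > 0 and seg.start < ordered[i - 1].end:
            problems.append(f"segment {i}: overlaps the previous segment")
    return problems


def speaking_time(segments: list[Segment]) -> dict[str, float]:
    """Total seconds of speech per speaker ID."""
    totals: dict[str, float] = {}
    for seg in segments:
        if seg.label == "speech" and seg.speaker is not None:
            totals[seg.speaker] = totals.get(seg.speaker, 0.0) + (seg.end - seg.start)
    return totals
```

For example, a file segmented as silence (0.0 to 1.5 s), speaker `spk_A` (1.5 to 4.0 s), and speaker `spk_B` (4.0 to 6.5 s) passes `validate` with no problems, and `speaking_time` reports 2.5 seconds for each speaker.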

Our human experts-in-the-loop and deep NLP expertise can provide industry-leading verbatim or non-verbatim transcriptions for any initiative, saving you time, labor, and cost.
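The difference between verbatim and non-verbatim transcription can be illustrated with a small Python sketch: verbatim transcripts keep disfluencies such as filler words, while non-verbatim (clean) transcripts drop them. The filler list and the `to_non_verbatim` helper are hypothetical, and the sketch only removes standalone fillers. Real transcription style guides also cover false starts, stutters, and repetitions, which usually need human judgment.

```python
import re

# Hypothetical English filler list; a real transcription style guide defines its own.
FILLERS = ("um", "uh", "er", "ah")

# A standalone filler word plus an optional comma on either side, so that
# "the, uh, order" becomes "the order" rather than "the,, order".
_FILLER_RE = re.compile(r",?\s*\b(?:" + "|".join(FILLERS) + r")\b,?", re.IGNORECASE)


def to_non_verbatim(verbatim: str) -> str:
    """Drop standalone filler words from a verbatim transcript line."""
    text = _FILLER_RE.sub("", verbatim)
    # Collapse any doubled spaces left behind and trim the ends.
    return re.sub(r"\s{2,}", " ", text).strip()


print(to_non_verbatim("Um, I think the, uh, order is late."))  # I think the order is late.
```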

Innodata can annotate the sentiment and intent of audio, along with cues such as speech intensity, context, word rate, pitch, pitch changes, and stress, for use cases like customer experience, call center dialogue analysis, customer opinion estimation, and product or brand reputation monitoring.
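Some of these cues can be measured directly from timed audio. The Python sketch below computes two of them: word rate in words per minute for a timed utterance, and intensity as RMS level in dBFS for a block of normalized samples. The `Utterance` type and both helpers are hypothetical illustrations that assume timestamps in seconds and samples as floats in [-1.0, 1.0]; they are not an Innodata tool, and pitch and stress estimation would need more involved signal processing.

```python
import math
from dataclasses import dataclass


@dataclass
class Utterance:
    text: str     # transcript of the utterance
    start: float  # start time in seconds
    end: float    # end time in seconds


def words_per_minute(utt: Utterance) -> float:
    """Word rate: whitespace-separated words divided by duration in minutes."""
    minutes = (utt.end - utt.start) / 60.0
    if minutes <= 0:
        raise ValueError("utterance must have a positive duration")
    return len(utt.text.split()) / minutes


def rms_dbfs(samples: list[float]) -> float:
    """Intensity as RMS level in dB relative to full scale (samples in [-1.0, 1.0])."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    # Pure silence has no finite dB level.
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


utt = Utterance("I would like to check my order status please", 10.0, 13.0)
print(round(words_per_minute(utt)))  # 180 (9 words over 3 seconds)
```

Comparing such per-utterance values against a speaker's own baseline is one way annotators could flag, for instance, a caller who suddenly speaks much faster or louder.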

Just as our speech and audio data collection covers a wide variety of speaker traits, we can label traits such as languages, dialects, accents, and demographics (like gender and age) within audio files.

Innodata can provide speech and audio data annotation to any project specification, including transcription and annotation requirements, delivery method, and delivery schedule.

In addition to our audio and speech annotation offerings, our global subject matter experts can classify files into broader pre-established categories, like recording quality, amount of background noise, speaker intents, music vs. no music, conversational topics, speaker languages and dialects, the number of speakers, and more.

Scale your virtual assistants, ASR or text-to-speech models, conversational AI, wearables, and other NLP initiatives with Innodata’s end-to-end services.

Whether you use our collected or annotated data, or need help utilizing your existing data to deploy or develop speech and audio AI/ML models, Innodata can help you expedite time-to-market. Utilize our world-class subject matter experts to build, train, and deploy models, augment your team, prevent model drift, and scale your models and operations faster.

Innodata can build, train, and deploy customized audio and speech AI and ML models tailored to your use case and specifications, built on your preferred framework.

When you need to scale your team or deploy a one-off initiative, we have the resources to help. Use Innodata’s experts to avoid hiring, training, and developing staff internally.

We can help identify data quality issues, integrity problems, demographic shifts, and changes in workforce bias or behavior. We then address them with suitable learning approaches, periodic retraining on new high-quality data, and weighted training data to achieve the confidence scores you need.
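As a rough illustration of detecting a demographic shift and then weighting data, the Python sketch below compares the age-group mix of training data with production data using the Population Stability Index (PSI), a common drift metric swapped in here for illustration, and then computes inverse-frequency sample weights so that each group contributes equally during retraining. The function names and age bands are hypothetical, and this is one possible approach rather than Innodata's method. A frequently cited rule of thumb treats PSI above 0.25 as a significant shift, but thresholds should be set per project.

```python
import math
from collections import Counter


def distribution(labels: list[str]) -> dict[str, float]:
    """Share of samples in each group, e.g. each age band."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def population_stability_index(expected: dict[str, float],
                               actual: dict[str, float],
                               eps: float = 1e-6) -> float:
    """PSI between a baseline and a current categorical distribution.

    eps keeps the log finite when a group is missing from one side.
    """
    psi = 0.0
    for group in set(expected) | set(actual):
        e = expected.get(group, 0.0) + eps
        a = actual.get(group, 0.0) + eps
        psi += (a - e) * math.log(a / e)
    return psi


def inverse_frequency_weights(labels: list[str]) -> list[float]:
    """Per-sample weights so that every group has the same total weight."""
    counts = Counter(labels)
    scale = len(labels) / len(counts)
    return [scale / counts[label] for label in labels]


# Hypothetical age bands: training data skews young, production data skews older.
train = ["18-34"] * 50 + ["35-54"] * 30 + ["55+"] * 20
prod = ["18-34"] * 20 + ["35-54"] * 30 + ["55+"] * 50
print(round(population_stability_index(distribution(train), distribution(prod)), 2))  # 0.55
```

Inverse-frequency weighting is only one way to rebalance; collecting more data for underrepresented groups addresses the same shift at the source.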
