Accelerating AI with Data Annotation

We’re still a long way from realizing the full potential of artificial intelligence. Sorry to burst your bubble, but self-driving cars taking over the roads and robot doctors are closer to science fiction than reality. Despite the hype around these AI-powered initiatives, the harsh truth is that we still do not have enough data to speed the advancement of many of these projects. And while everyone understands that AI requires vast amounts of “big data” to continually learn and identify patterns that humans can’t, it’s really about getting “smart data” to train machine learning models. After all, artificial intelligence is only as smart as the data it’s fed.

While it’s not always easy to turn raw data into smart data, one process adds vital bits of information to raw data and gives structure to what is otherwise just noise to a supervised learning algorithm: data annotation.

What is Data Annotation?

Data annotation (commonly referred to as data labeling) plays a crucial role in ensuring your AI and machine learning projects are trained with the right information. Data annotation and labeling provide the initial setup, supplying a machine learning model with what it needs to understand and distinguish between various inputs and come up with accurate outputs. By repeatedly feeding tagged and annotated datasets through an algorithm, you’re able to establish a model that gets smarter over time. As a rule, the more accurately annotated data you use to train the model, the smarter it becomes. But you can’t do it without a little help. Producing the necessary annotation from any asset at scale is a challenge for many, mainly due to the complexity involved. Getting the most accurate labels demands time and expertise.
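To make the idea of feeding labeled examples through an algorithm concrete, here is a deliberately tiny sketch in Python. It is not a production technique: it simply counts which words appear under which label and scores new text against those counts. The example sentences, the label names (`PAYMENT`, `TERMINATION`) and the function names are all invented for illustration.

```python
from collections import Counter, defaultdict

def train(examples):
    """Count how often each word appears under each human-assigned label."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def predict(model, text):
    """Pick the label whose training words overlap most with the new text."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in model.items()}
    return max(scores, key=scores.get)

# Hypothetical annotated examples: (raw text, label supplied by a human).
examples = [
    ("payment due within thirty days", "PAYMENT"),
    ("invoice payment terms", "PAYMENT"),
    ("party may terminate this agreement", "TERMINATION"),
    ("termination upon written notice", "TERMINATION"),
]

model = train(examples)
prediction = predict(model, "terminate the agreement with notice")
print(prediction)
```

Without the human-supplied labels in `examples`, the word counts would have nothing to attach to, which is the point the paragraph above makes.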

Humans are needed to identify and annotate specific data so machines can learn to identify and classify information. Without these labels, tagged and validated by humans, a machine learning algorithm will have a difficult time computing the necessary attributes. When it comes to annotation, machines can’t function without humans in the loop.

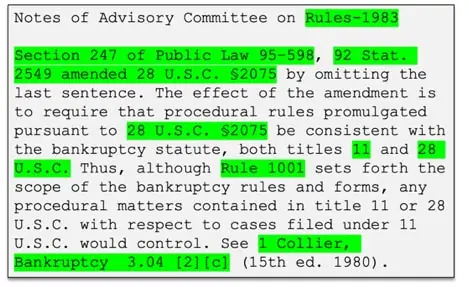

For example, a court may issue a decision containing mostly unstructured data. Making any sense of this information demands the expertise of legal professionals to review and provide structure as well as context to the information, such as tagging specific clauses and citing other cases relevant to the judgement being scrutinized. This process of extraction and tagging helps provide a machine learning algorithm with bits of information it could not acquire on its own.
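As a rough illustration of what that tagging might look like as data, the sketch below stores each annotation as a labeled character span over the original text. The sentence, the case name, the tag names (`CLAUSE`, `CITED_CASE`) and the `SpanLabel` structure are all made up for this example; real annotation tools use their own formats.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SpanLabel:
    start: int   # inclusive character offset into the text
    end: int     # exclusive character offset into the text
    label: str   # hypothetical tag name chosen by the annotator

def annotate(text, phrase, label):
    """Tag the first occurrence of `phrase` in `text` with `label`."""
    start = text.find(phrase)
    if start == -1:
        raise ValueError(f"phrase not found: {phrase!r}")
    return SpanLabel(start, start + len(phrase), label)

# Invented sentence standing in for a passage of a court decision.
text = ("The court held that the indemnification clause was "
        "unenforceable, citing Smith v. Jones.")

labels = [
    annotate(text, "indemnification clause", "CLAUSE"),
    annotate(text, "Smith v. Jones", "CITED_CASE"),
]

# Recover the tagged phrases from the offsets to check the annotations.
tagged = [(text[s.start:s.end], s.label) for s in labels]
print(tagged)
```

Storing offsets rather than copies of the phrase keeps the annotation tied to the exact position in the source document, which matters when the same phrase appears more than once.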

Ultimately, artificial intelligence can’t succeed without access to the right data. Feeding it the right information with a learnable ‘signal’ consistently added at a massive scale is going to drive constant improvement over time. That’s the power of data annotation. However, before you begin with any data annotation project, it’s important to consider the following questions.

What do you need to annotate?

There are many different types of annotations, depending on the form the data takes. They range from image and video annotation to text categorization, semantic annotation, and content categorization. What is most important to achieving your particular business goals? Will one form of data help accelerate a particular project more than another? Determine what you will need to be successful first and foremost.

Is your annotation accurately representative of a particular domain?

Before you start labeling data, you should understand the domain vocabulary, format and category of the data you intend to use – also known as building an ontology. Ontologies play a critical role in machine learning. According to the Wikipedia definition, ontologies are “formal naming and definition of the types, properties, and interrelationships of the entities that really or fundamentally exist for a particular domain of discourse.” In other words, ontologies give meaning to things. Think of this as teaching your AI to communicate using a common language. It is critical to identify the problem statement and understand how AI can interpret data to semantically solve a certain use case.
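A very small ontology can be sketched as a set of terms with parent relationships, which lets you check that a specific label belongs to a broader category. The terms below (`LegalDocument`, `Clause`, and so on) and the helper names are hypothetical; real ontologies are usually far richer and are often expressed in dedicated formats rather than a Python dictionary.

```python
# Hypothetical mini-ontology: each term points to its parent term.
ONTOLOGY = {
    "LegalDocument": {"parent": None},
    "Clause": {"parent": "LegalDocument"},
    "IndemnificationClause": {"parent": "Clause"},
    "Citation": {"parent": "LegalDocument"},
}

def ancestors(term):
    """Return the chain of parent terms, nearest first."""
    if term not in ONTOLOGY:
        raise KeyError(f"unknown term: {term}")
    chain = []
    parent = ONTOLOGY[term]["parent"]
    while parent is not None:
        chain.append(parent)
        parent = ONTOLOGY[parent]["parent"]
    return chain

def is_a(term, category):
    """True if `term` is `category` or one of its descendants."""
    return term == category or category in ancestors(term)

print(ancestors("IndemnificationClause"))
print(is_a("Citation", "Clause"))
```

Rejecting any label that is not a key in `ONTOLOGY` is one simple way to keep annotators working in the same shared vocabulary.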

How much data do you need for your ML/AI project?

The likely answer is as much data as possible, but in some instances certain benchmarks can be established based on the specific need (e.g. the past 10 years of SEC regulatory data). The right amount is best determined by having a domain expert handle the annotations and continually evaluate their accuracy, which helps create the ground truth data that will be used to train your algorithm.
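One simple way to evaluate accuracy is to compare a set of labels against the expert's ground truth and report the share that match. The sketch below assumes both lists cover the same items in the same order; the label values are invented, and plain accuracy is only one of several measures you might choose.

```python
def accuracy(predicted, ground_truth):
    """Fraction of items where the label matches the ground truth."""
    if len(predicted) != len(ground_truth):
        raise ValueError("label lists must be the same length")
    if not ground_truth:
        raise ValueError("need at least one labeled item")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(ground_truth)

# Hypothetical labels: the expert's ground truth vs. another annotator.
ground_truth = ["CLAUSE", "CITATION", "CLAUSE", "OTHER"]
annotator = ["CLAUSE", "CITATION", "OTHER", "OTHER"]

score = accuracy(annotator, ground_truth)
print(score)  # 3 of 4 labels match
```

Tracking this figure as more data is annotated gives a rough signal of whether label quality is holding up as the dataset grows.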

Should you outsource or annotate in-house?

According to research from Cognilytica, companies spend five times as much on internal data labeling as they do with third parties. Not only is this costly, it is time-intensive, taking valuable time away from your team when they could be focusing their talents elsewhere. What’s more, building the necessary annotation tools often requires more work than some ML projects. For many companies, though, security is an issue, so there is often hesitation to release data. That said, many third-party providers have privacy and security procedures in place to address these concerns.

Do you need your annotators to be subject matter experts?

Depending on the complexity of the data you are annotating, it is vital to have the right expert handle annotations. While several companies use the crowd for basic annotations, more complex data requires specialized skills to ensure accuracy. For example, interpreting complex legal obligations and agreements from ISDA contracts requires legal specialists who can identify and label the most appropriate information. The same goes for other fields like science and medicine, where deep understanding of and fluency in the content cannot be taken for granted. If there are even slight errors in the data or in the training sets used to create predictive models, the consequences can be catastrophic.

Mike Goldberg

Michael Goldberg enjoys learning and reading about cutting-edge technology, data and AI. When afforded the opportunity, he's more than happy to share his thoughts on what it means for us - professionally and personally.
