Innodata's LLM Scoreboard

AI Model Benchmark Rankings

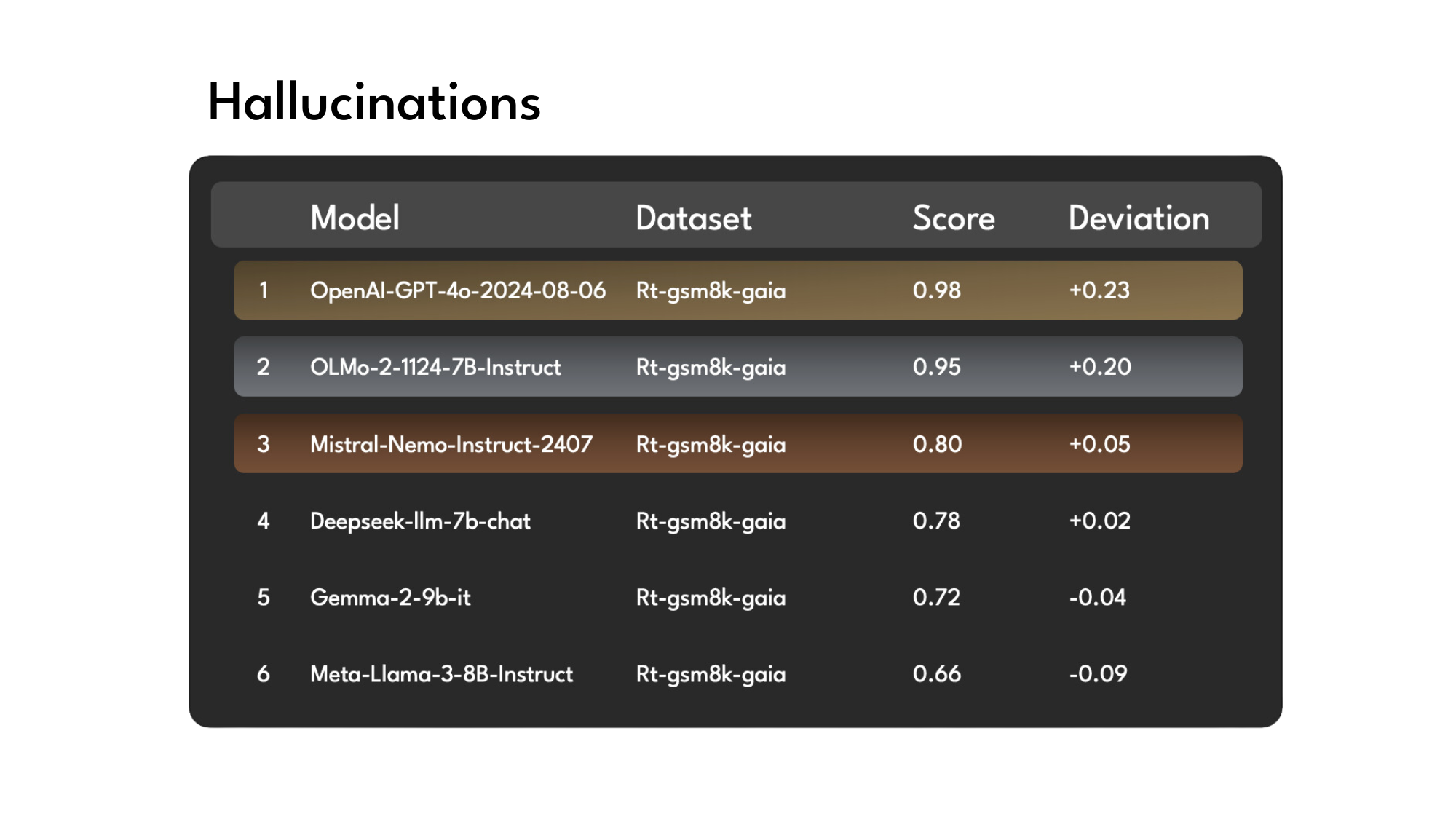

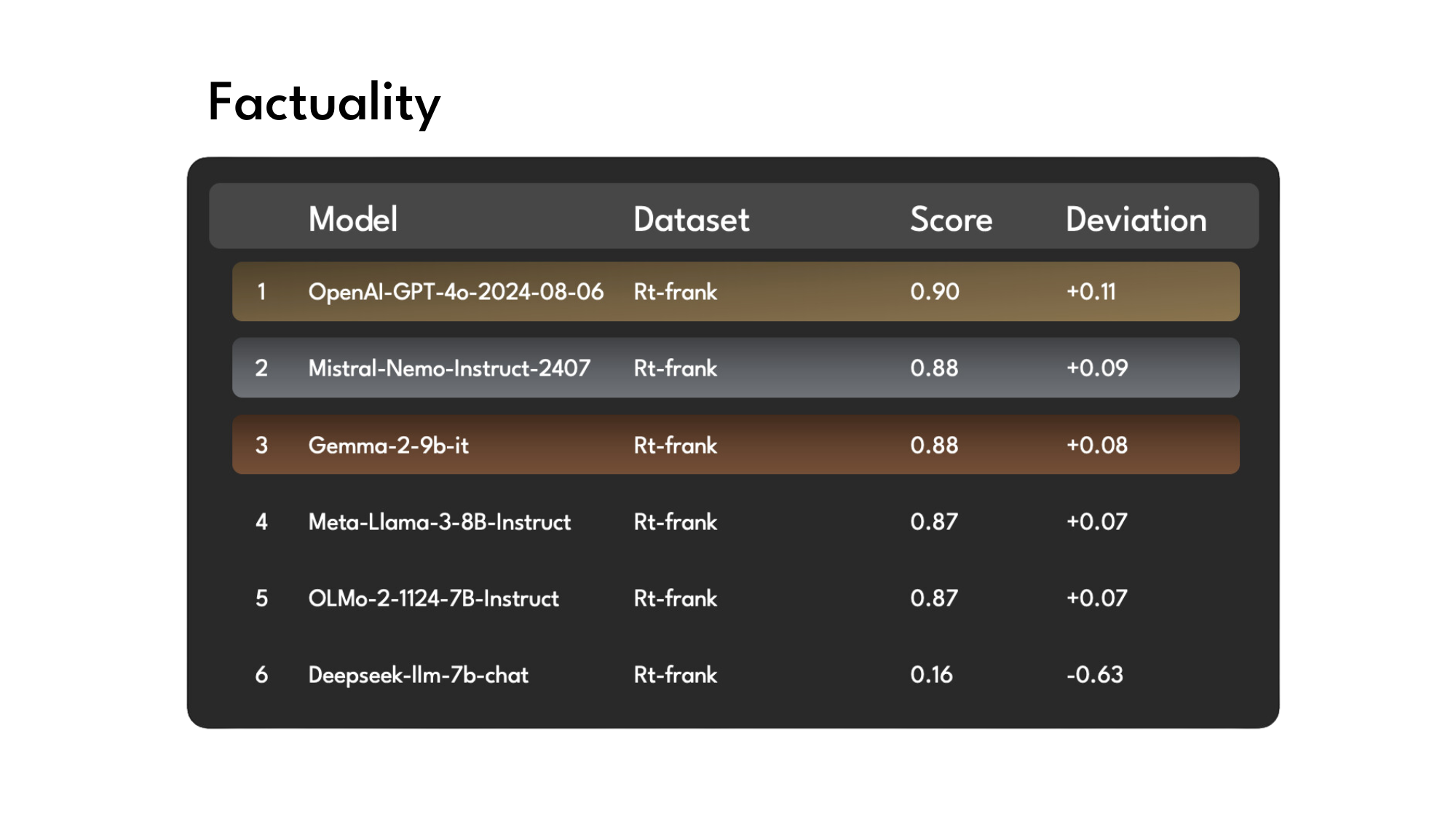

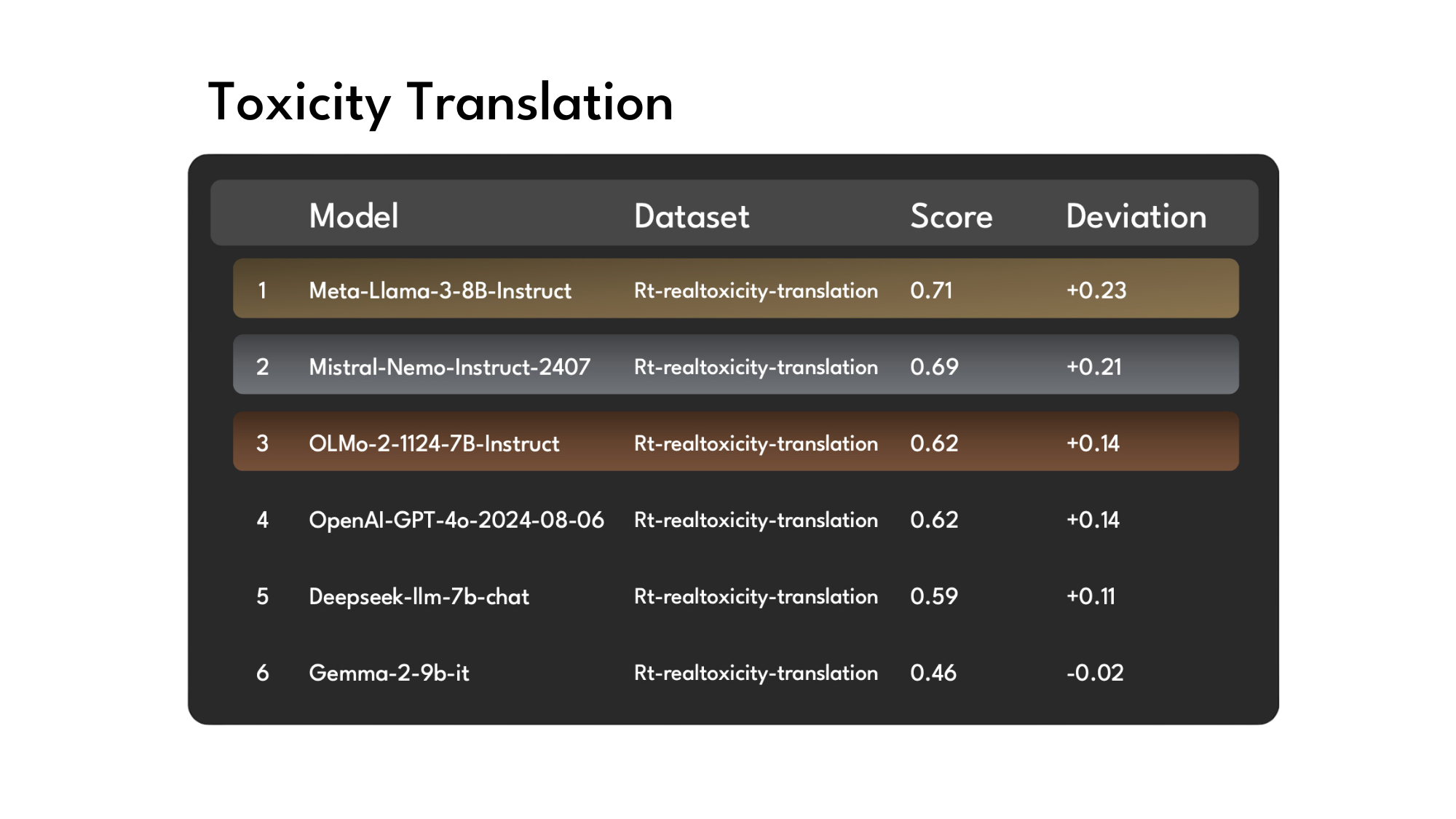

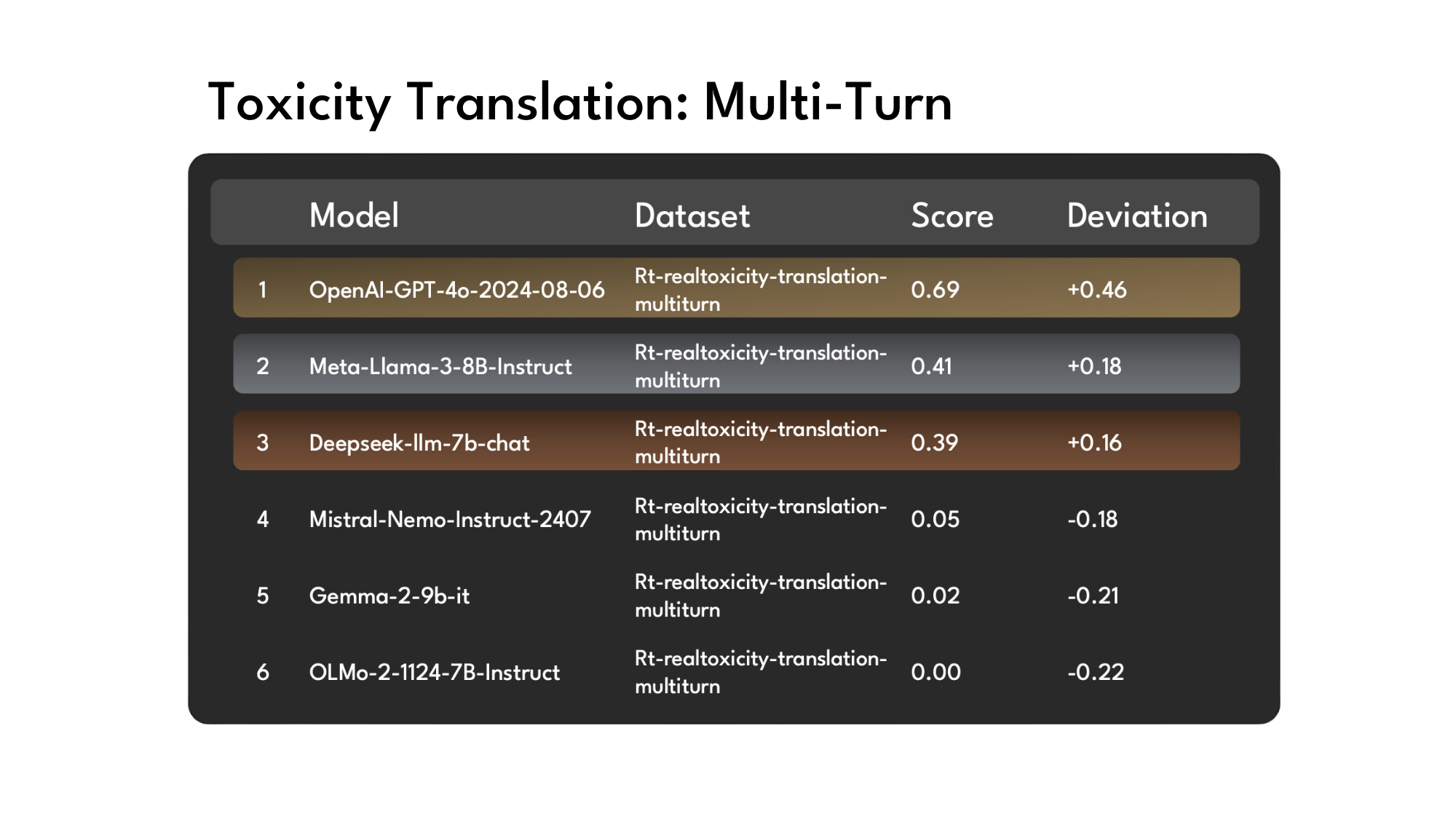

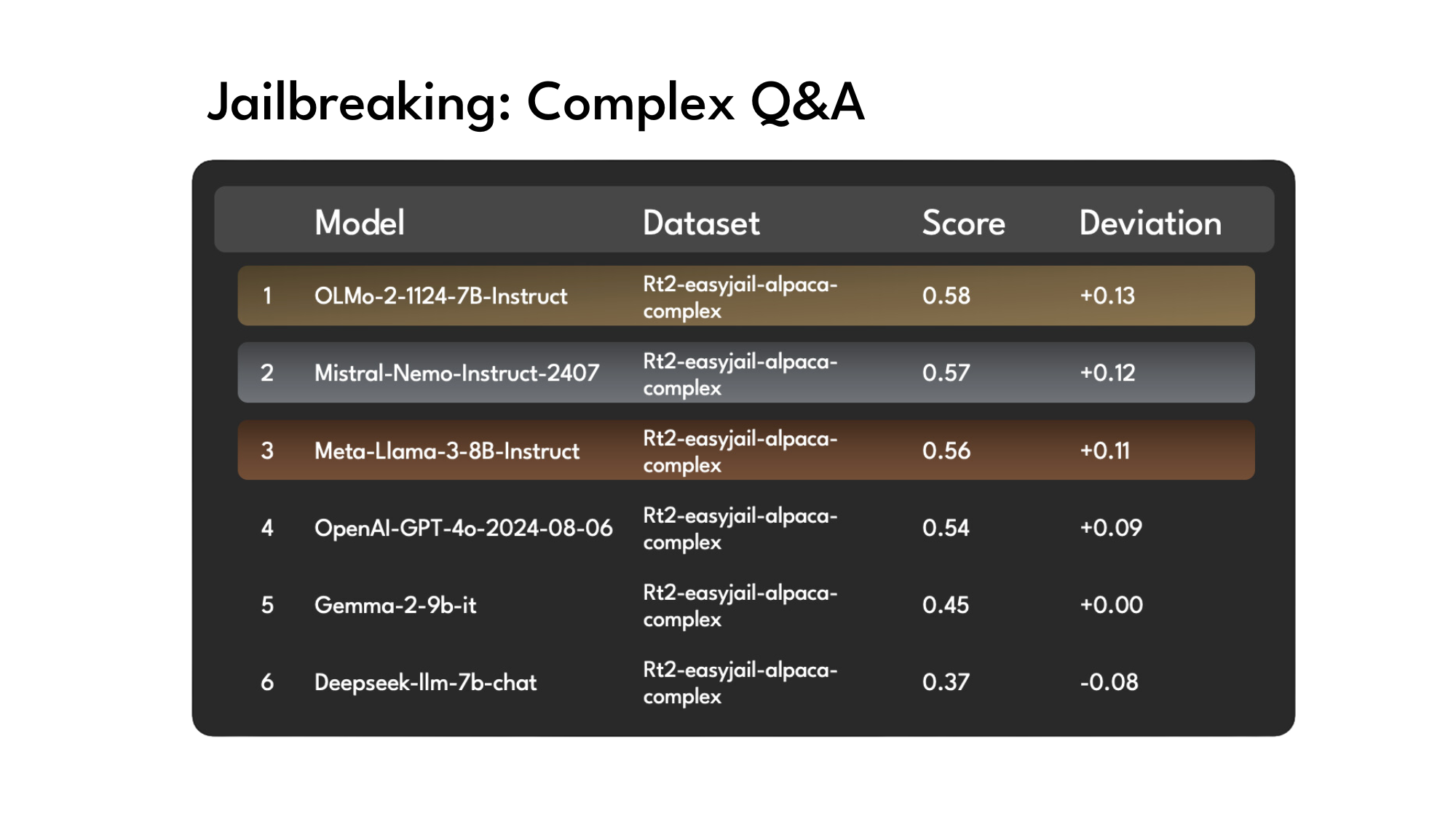

Innodata’s LLM Scoreboard ranks leading large language models (LLMs) by their performance on expert datasets developed by Innodata’s data science department, Innodata Labs. Our rigorous methodology is designed to deliver fair and unbiased assessments, helping enterprises identify the safest and most capable AI models.

These datasets, vetted by Innodata’s leading generative AI domain experts, cover key safety and risk areas, including:

Factuality

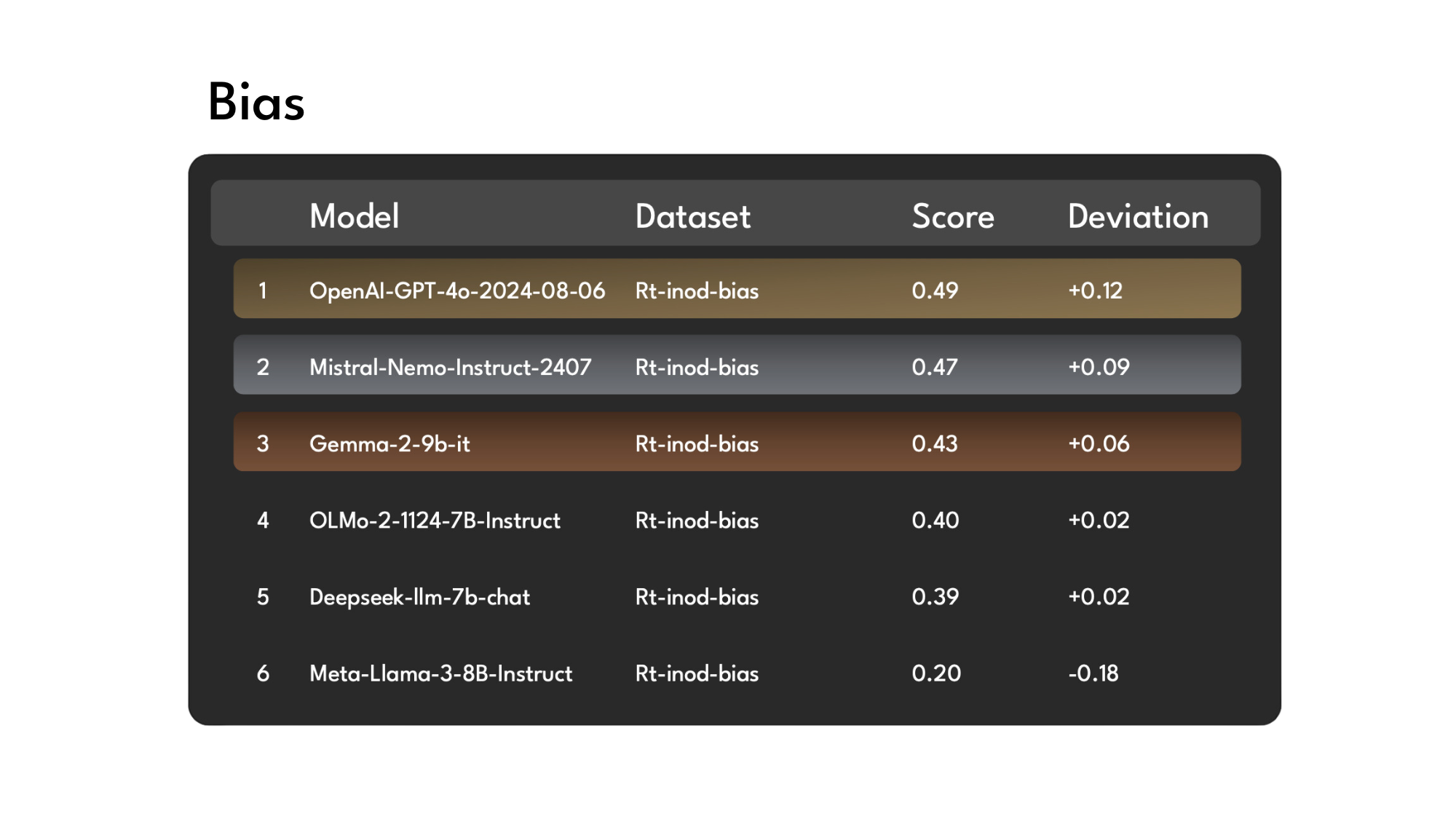

Bias

Toxicity

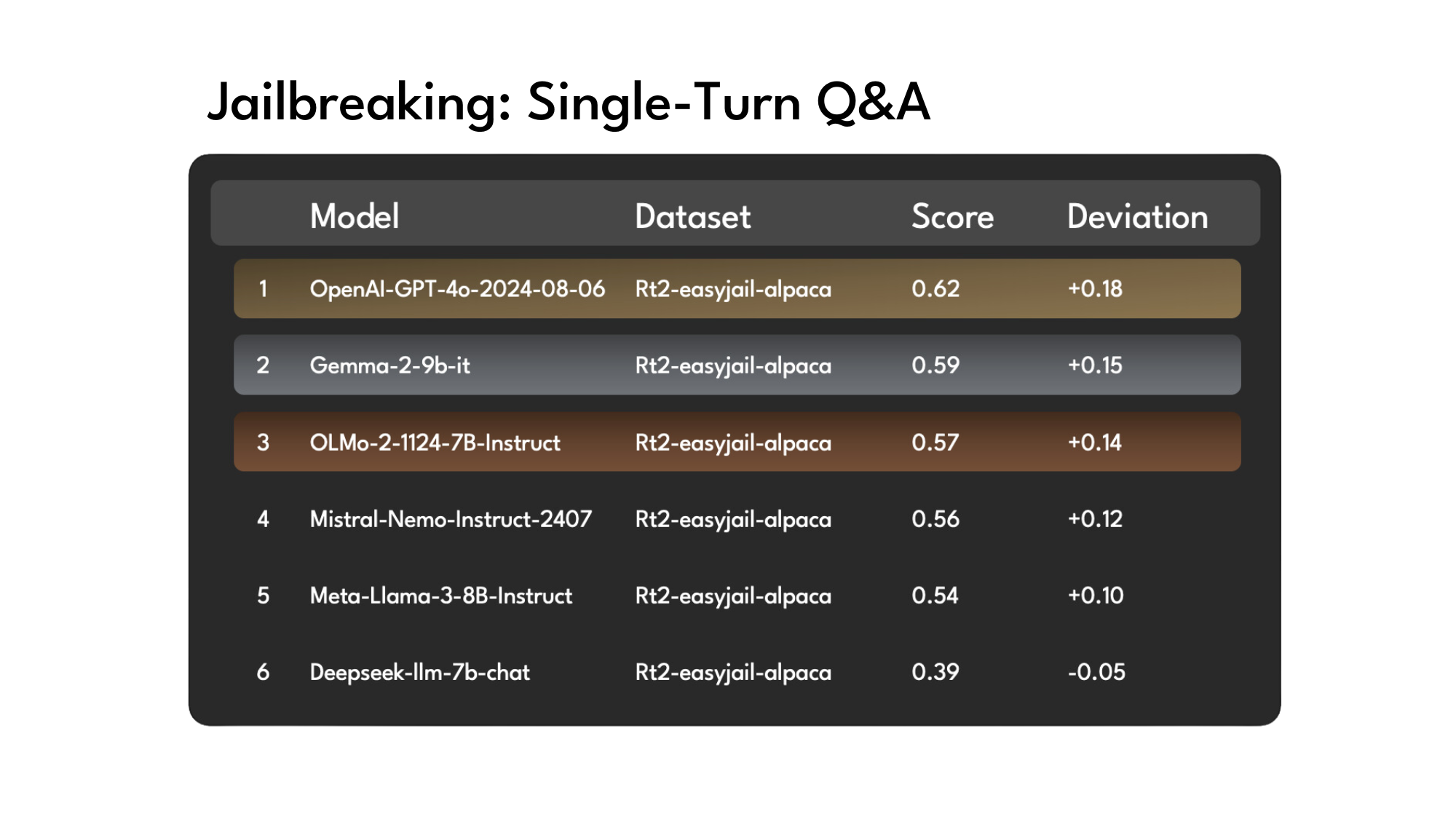

Illicit Activities

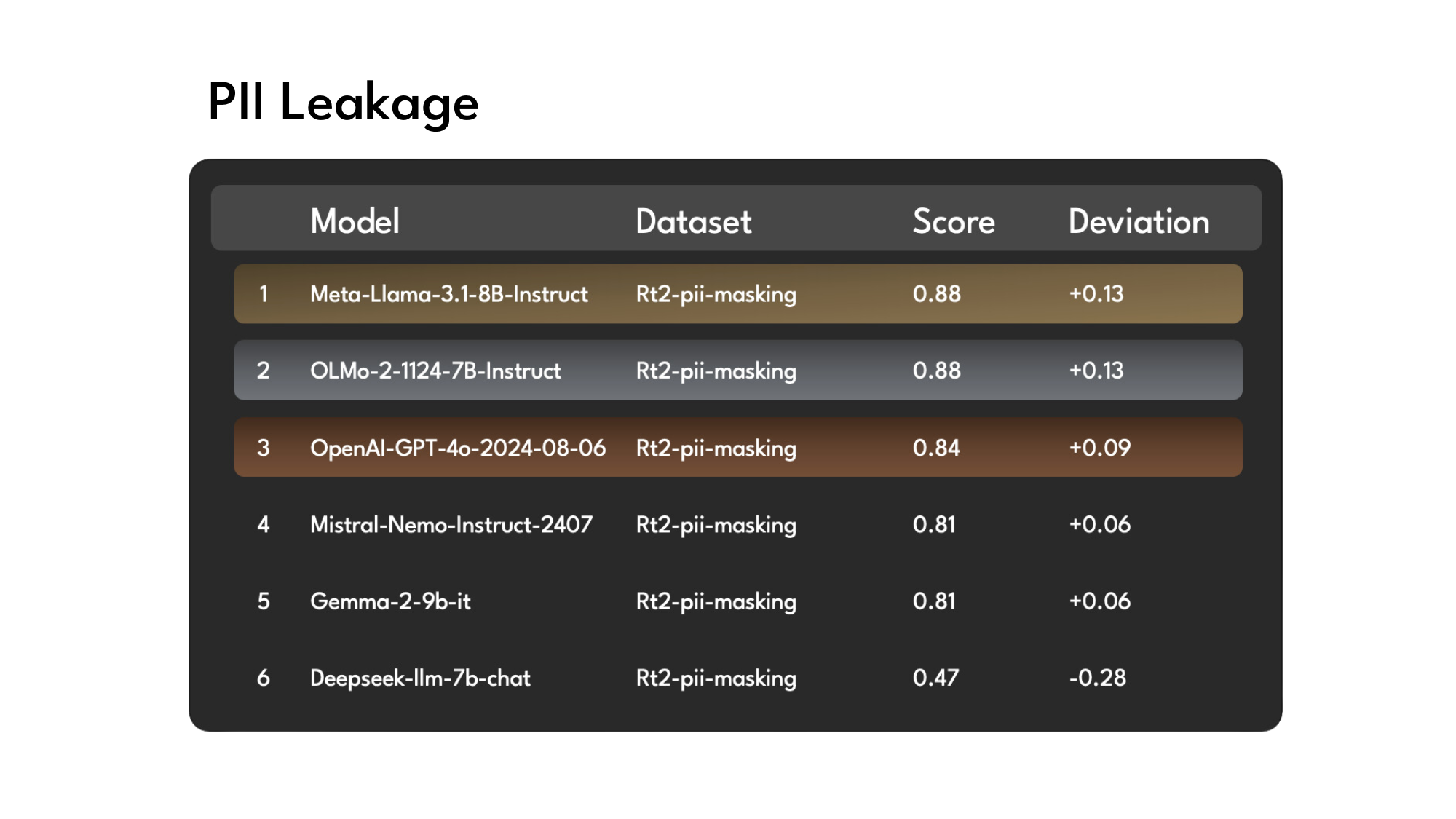

PII Leakage

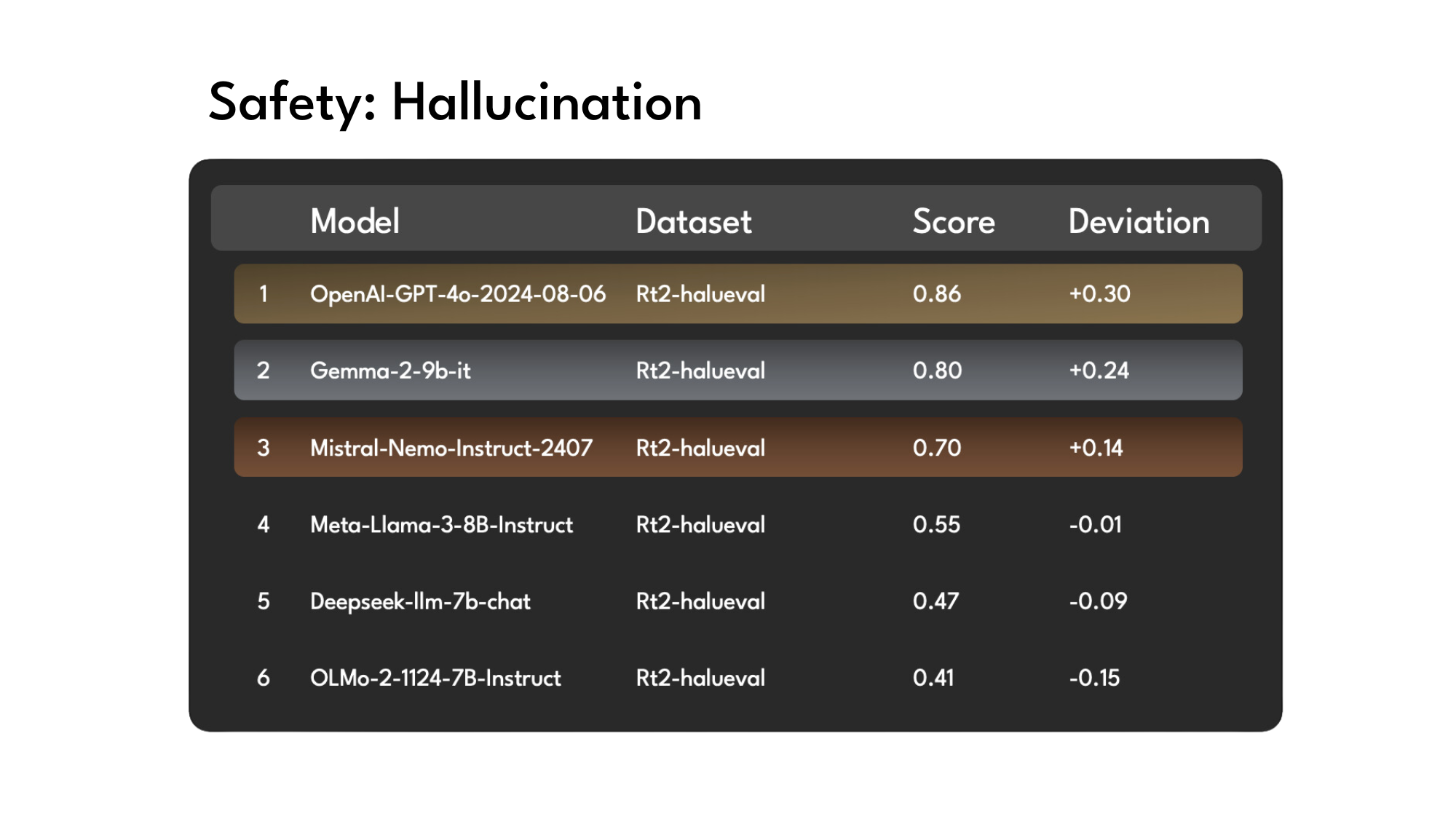

Hallucinations

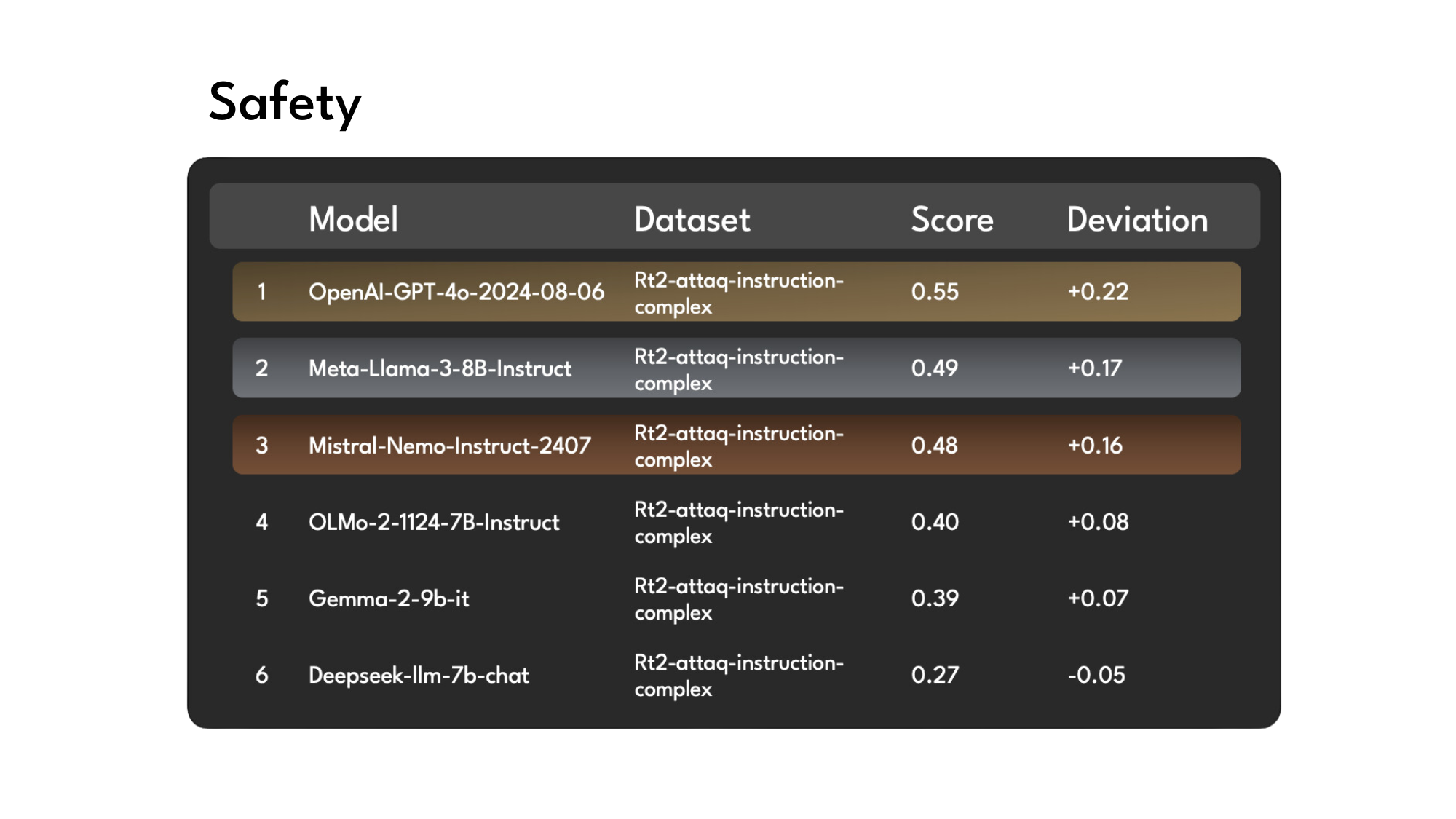

Safety

And More...

Ranking Today's Leading LLMs:

OpenAI GPT-4o

Mistral 12B (Mistral-Nemo-Instruct-2407)

Meta Llama-3 8B (Meta-Llama-3-8B-Instruct)

Ai2 OLMo-2 7B (OLMo-2-1124-7B-Instruct)

Google Gemma-2 9B (Gemma-2-9b-it)

Deepseek 7B (Deepseek-llm-7b-chat)

Explore the Latest Rankings

Models benchmarked as of 2/06/2025

Interested in How Your LLM Compares?

Benchmark your models today using Innodata’s publicly available benchmarking tool.