Generative AI Data Solutions

Trusted Data Solutions for Powerful Generative AI Model Development

Let's Innovate Together

Bring 35+ years of data expertise to your AI initiatives with Innodata. Seven of the world’s largest tech companies trust Innodata for their AI needs — let’s make you the next success story.

Fuel Advanced AI/ML Model Development With Data Solutions for Generative AI

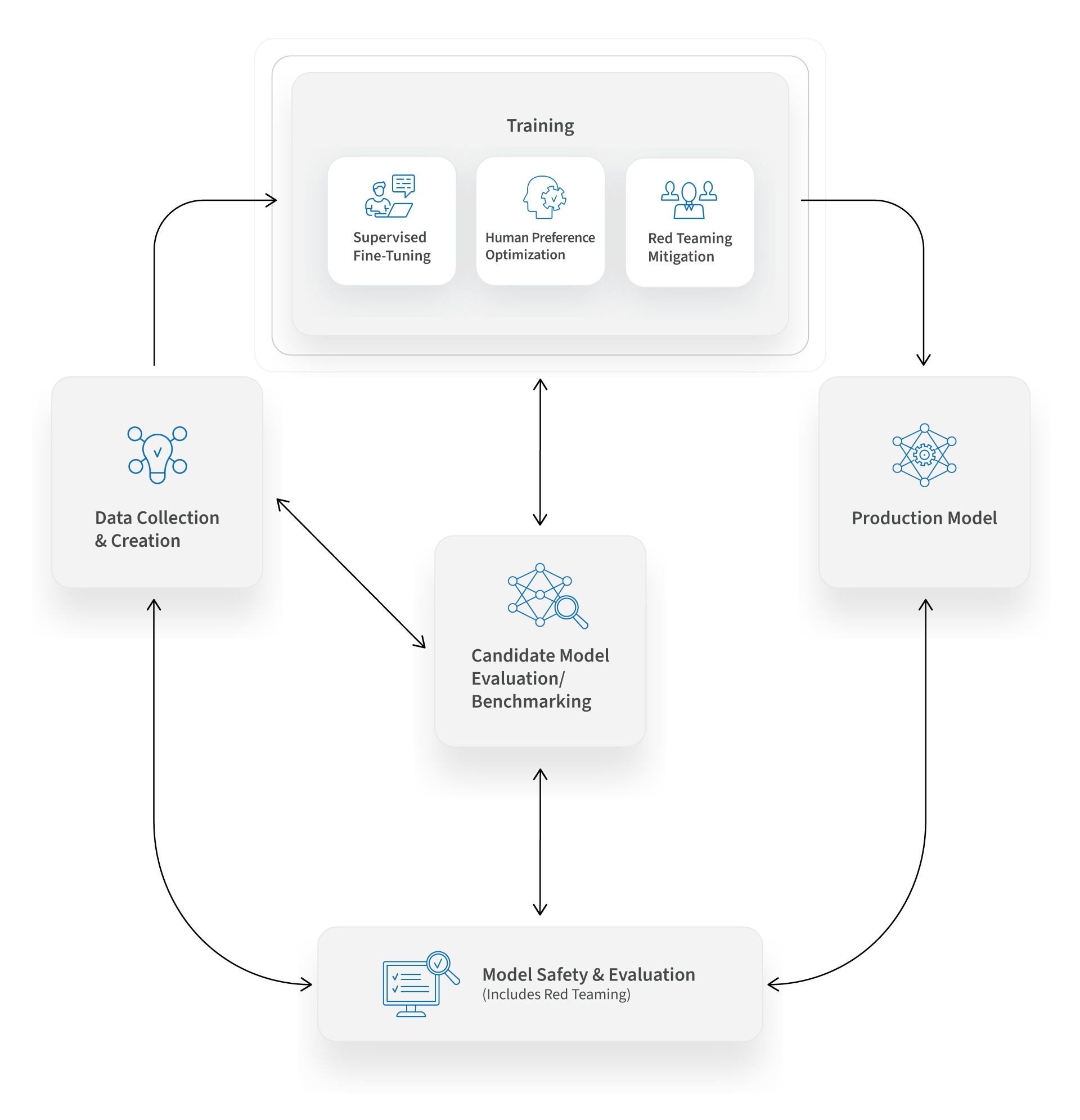

High-quality data solutions for developing industry-leading generative AI models, including diverse golden datasets, fine-tuning data, human preference optimization, red teaming, model safety, and evaluation.

Data Collection & Creation

Our global teams rapidly collect or create realistic and diverse training datasets tailored to your unique use case requirements to enrich the training of generative AI models.

Additionally, develop LLM prompts with high-quality prompt engineering, allowing in-house experts to design and create prompt data that guide models in generating precise outputs.

Supervised Fine-Tuning

Linguists, taxonomists, and subject matter experts across 85+ languages of native speakers create datasets ranging from simple to highly complex for fine-tuning across an extensive range of task categories and sub-tasks (90+ and growing).

Human Preference Optimization

Improve hallucinations and edge-cases with ongoing feedback to achieve optimal model performance through methods like RLHF (Reinforcement Learning from Human Feedback) and DPO (Direct Policy Optimization).

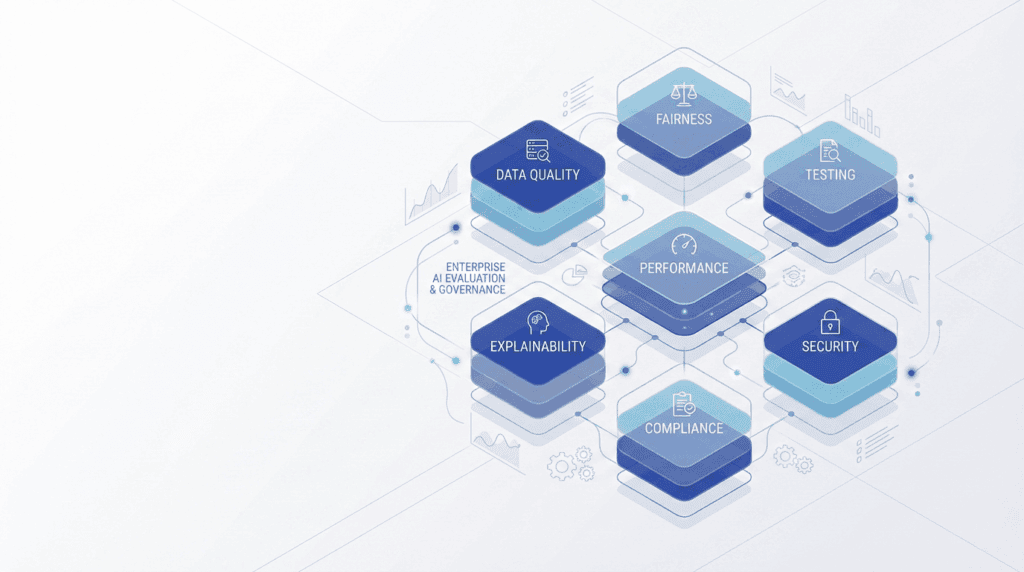

Model Safety, Evaluation, & Red Teaming

Address vulnerabilities with Innodata’s red teaming experts. Rigorously test and optimize generative AI models to ensure safety and compliance, exposing model weaknesses and improving responses to real-world threats.

Data Collection & Creation

Curate real-world data or synthetically generate a wide range of high-quality datasets across data types and demographic categories in over 85 native languages.

Our global teams rapidly collect or create realistic and diverse training datasets tailored to your unique use case requirements to enrich the training of generative AI models.

We also provide high-quality prompt engineering: in-house experts design and create prompt data that guides models toward precise outputs.

of respondents in a recent survey said their organization adopted AI-generated synthetic data because of challenges with real-world data accessibility.*

- Data Types: Image, video, sensor (LiDAR), audio, speech, document, and code.

- Demographic Diversity: Age, gender identity, region, ethnicity, occupation, sexual orientation, religion, cultural background, 85+ languages and dialects, and more.

Supervised Fine-Tuning

Native-speaking linguists, taxonomists, and subject matter experts across 85+ languages create fine-tuning datasets, from simple to highly complex, spanning an extensive range of task categories and sub-tasks (90+ and growing).

of respondents in a recent survey said fine-tuning an LLM successfully was too complex, or they didn’t know how to do it on their own.*

- Sample Task Taxonomies: Summarization, image evaluation, image reasoning, Q&A, question understanding, entity relation classification, text-to-code, logic and semantics, question rewriting, translation…

- SFT Techniques: Chain-of-thought, in-context learning, data augmentation, dialogue…

Human Preference Optimization

Rely on human experts in the loop to close the gap between model capabilities and human preferences. Their ongoing feedback reduces hallucinations and improves edge-case handling through methods such as RLHF (Reinforcement Learning from Human Feedback) and DPO (Direct Preference Optimization).

of respondents in a recent survey said RLHF was the technique they were most interested in using for LLM customization.*

- Example Feedback Types: DPO (Direct Preference Optimization), simple RLHF, complex RLHF, and nominal feedback.

Model Safety, Evaluation, & Red Teaming

Address vulnerabilities with Innodata’s red teaming experts. Rigorously test and optimize generative AI models to ensure safety and compliance, exposing model weaknesses and improving responses to real-world threats.

reduction in the violation rate of an LLM was seen in a recent study on adversarial prompt benchmarks after 4 rounds of red teaming.*

- Techniques: Payload smuggling, prompt injection, persuasion and manipulation, conversational coercion, hypotheticals, roleplaying, one-/few-shot learning, and more…

Why Choose Innodata for Your Generative AI Data Solutions?

Global Delivery Locations + Language Capabilities

Innodata’s global delivery locations work in over 85 native languages and dialects, providing comprehensive language coverage for your projects.

Quick Turnaround at Scale with Quality Results

Domain Expertise Across Industries

With 6,000+ in-house SMEs covering all major domains from healthcare to finance to legal, Innodata offers expert annotation, collection, fine-tuning, and more.

Linguist + Taxonomy Specialists

Our in-house linguists and taxonomists create custom taxonomies and guidelines tailored to generative AI model development.

Customized Tooling

CASE STUDIES

Success Stories

See how top companies are transforming their AI initiatives with Innodata’s comprehensive solutions and platforms. Ready to be our next success story?