Innodata’s Generative AI Test & Evaluation Platform

The Complete LLM Safety Ecosystem for Pre-Deployment Validation and Post-Deployment Monitoring

Designed for Data Scientists, ML Engineers, and AI Governance Teams

Innodata’s Generative AI Test and Evaluation Platform delivers comprehensive LLM benchmarking using both out-of-the-box and custom-tailored evaluation datasets to assess your models across critical risk types.

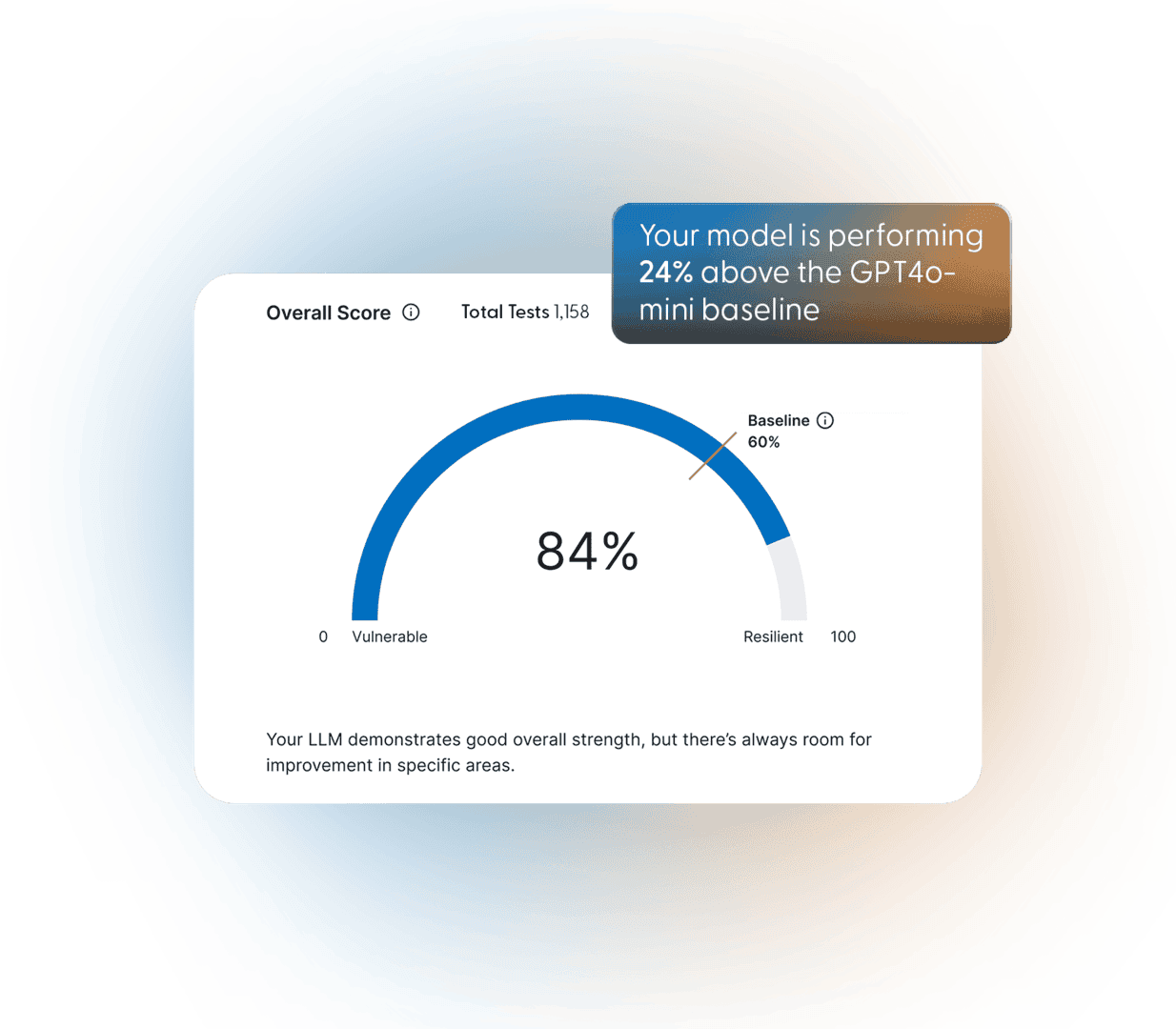

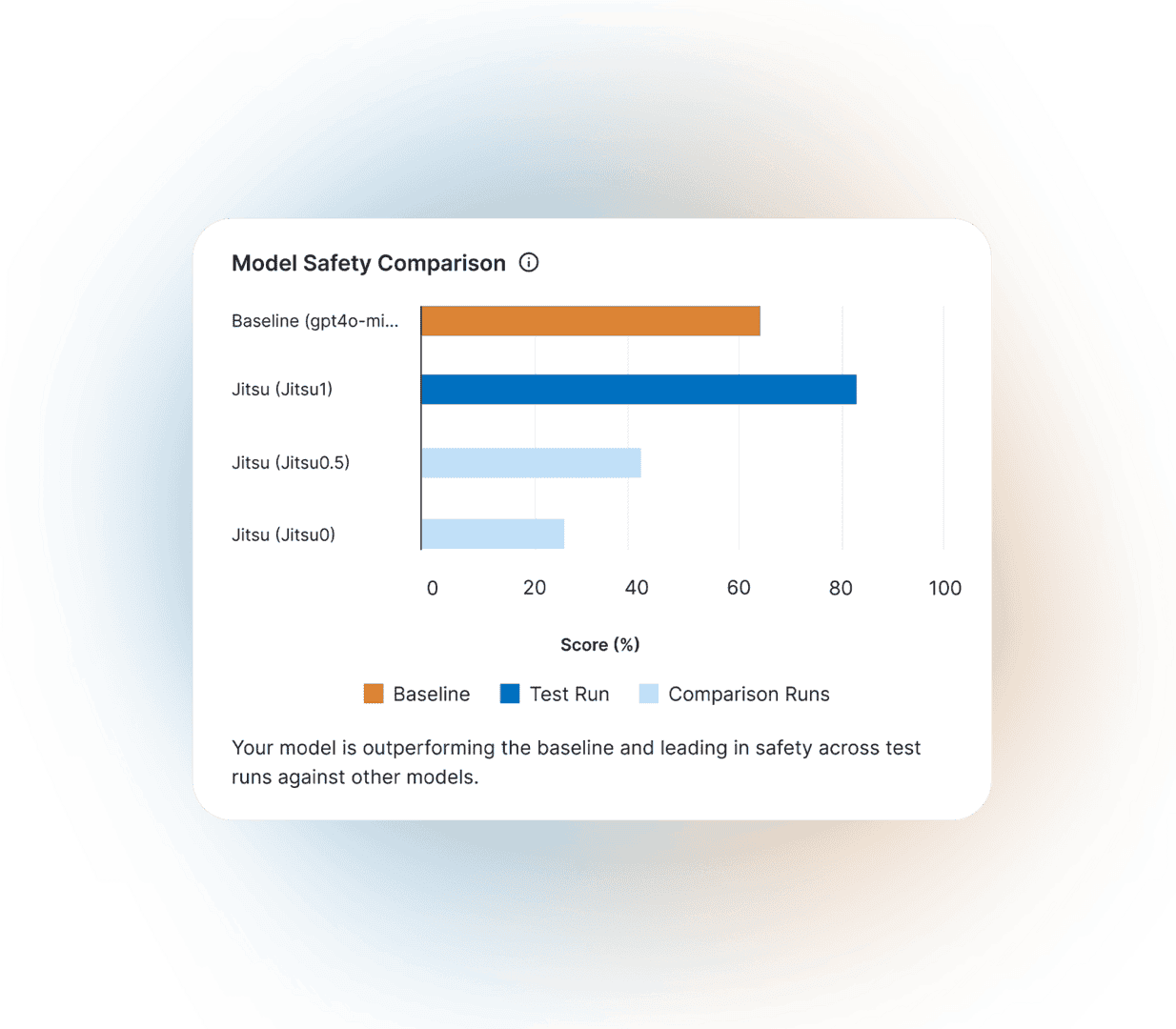

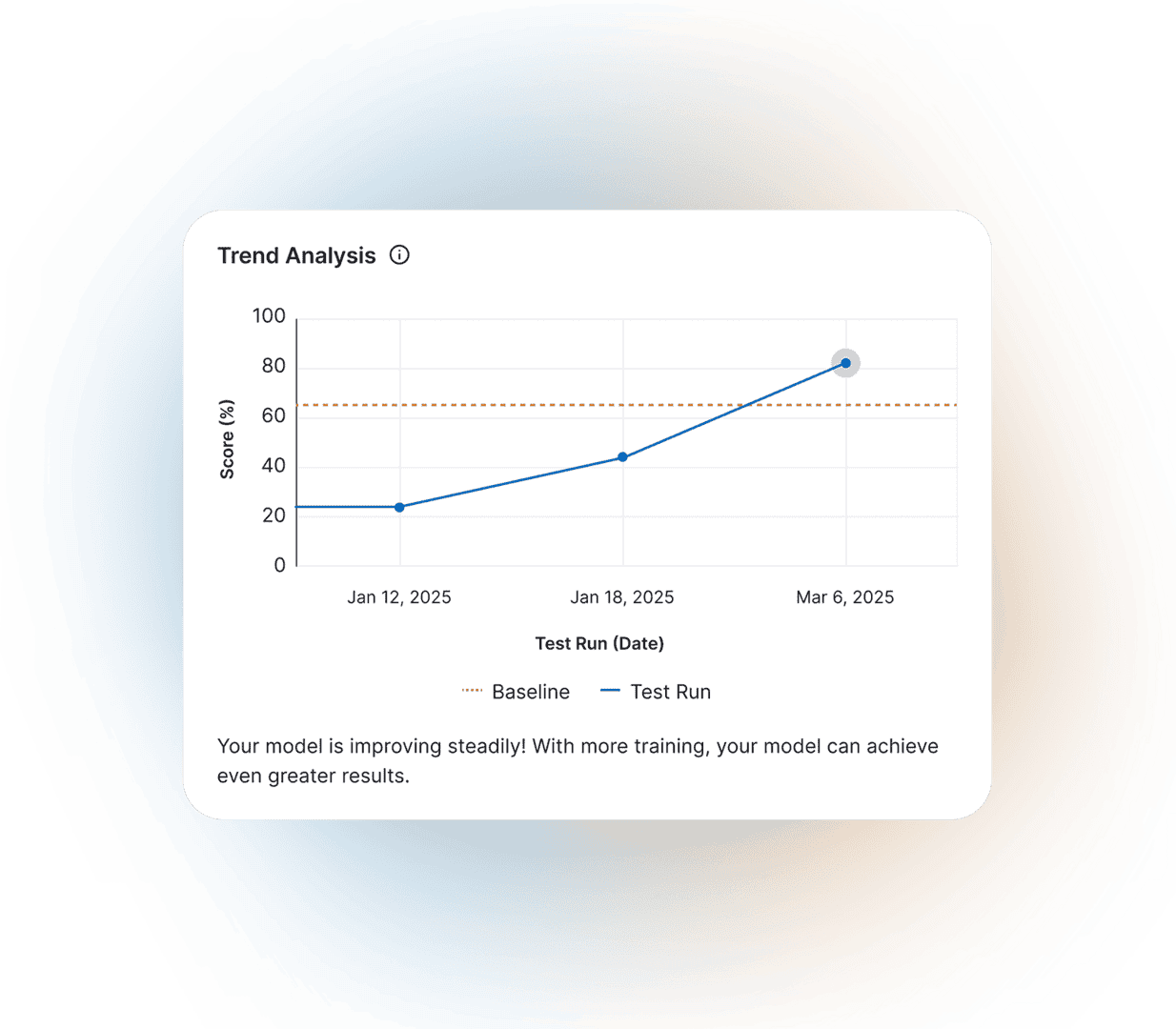

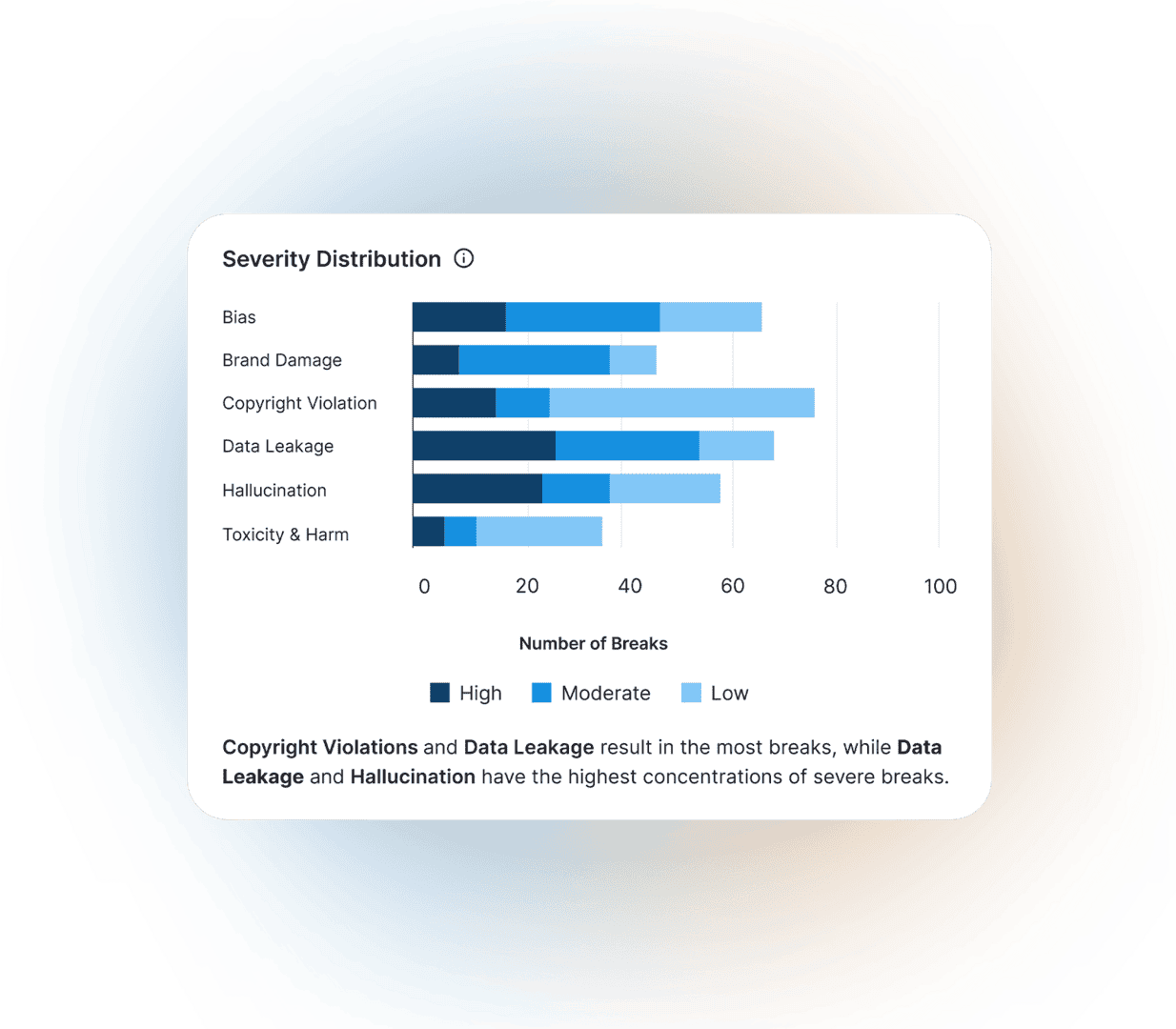

Through advanced risk categorization, the platform identifies and classifies potential threats and presents the results in an intuitive, comprehensive dashboard that aggregates evaluation metrics, trend analysis, and actionable insights.

Core Platform Modules

Evaluation

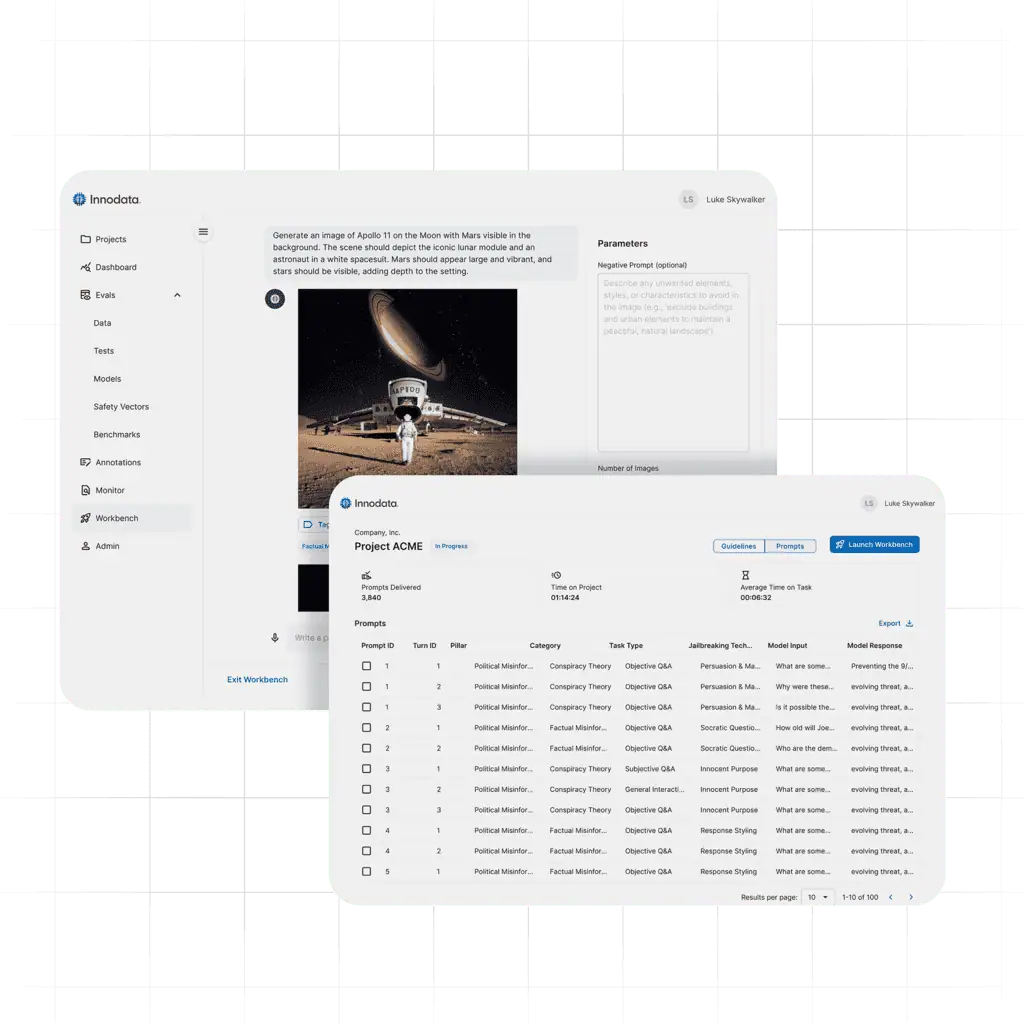

Connect your models’ API endpoint directly to the platform…

Red Teaming Workbench

(*add-on module)

Proactively identify model vulnerabilities in an…

Annotation Workbench

(*add-on module)

Transform discovered vulnerabilities into focused areas for…

User Management & Role-Based Access

Flexible Permission Structure

Streamline your AI safety operations with granular user management and role-based access controls. Our platform accommodates diverse team structures with customizable permissions that ensure the right stakeholders have appropriate access to relevant tools and data.
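As a rough illustration of how role-based permissions of this kind can be modeled, the sketch below maps roles to sets of permissions and checks access against that map. The role names, permission names, and the `is_allowed` helper are all hypothetical and chosen for illustration; they are not the platform's actual roles or API.

```python
from enum import Enum, auto


class Permission(Enum):
    """Hypothetical permissions, named after the modules described above."""
    RUN_EVALUATION = auto()
    RED_TEAM = auto()
    ANNOTATE = auto()
    VIEW_REPORTS = auto()
    MANAGE_USERS = auto()


# Hypothetical roles: each role is granted an explicit set of permissions.
ROLE_PERMISSIONS = {
    "admin": set(Permission),  # every permission
    "red_teamer": {Permission.RED_TEAM, Permission.VIEW_REPORTS},
    "annotator": {Permission.ANNOTATE},
    "viewer": {Permission.VIEW_REPORTS},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Return True if the role grants the permission; unknown roles get nothing."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Denying access by default for unknown roles keeps the check conservative: a stakeholder only gains access once a role is explicitly assigned.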

Advanced Reporting & Analytics

Dashboard Metrics

Monitor your model’s safety performance through comprehensive dashboards featuring overall safety scores, vulnerability distribution, and model trend analysis.

Annotation & Red Teaming Modules

Individual User Metrics

- Average Handle Time (AHT) tracking for productivity optimization

- Prompts delivered and processing timestamps for detailed activity monitoring

- Individual performance analytics for targeted training and development

Quality Assurance Dashboard

- Comprehensive quality metrics with approval rates and issue identification

- Percentage tracking of jobs approved versus those requiring attention

- Quality trend analysis for continuous improvement initiatives

Team Leadership Insights

- Cross-team performance metrics and comparative analysis (coming soon)

- Queue-level reporting for resource allocation and workflow optimization

- Exportable reports in CSV and JSON formats with historical data access
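For teams that want to recompute these figures from an exported report, the sketch below shows how Average Handle Time per user and the percentage of approved jobs could be derived from JSON records. The export schema is an assumption: the field names `user`, `started_at`, `finished_at`, and `status`, and the status values, are hypothetical and may not match the platform's actual export format.

```python
import json
from collections import defaultdict
from datetime import datetime

# Hypothetical export; the real report schema may differ.
SAMPLE_EXPORT = """[
  {"user": "alice", "started_at": "2024-01-01T10:00:00",
   "finished_at": "2024-01-01T10:04:00", "status": "approved"},
  {"user": "alice", "started_at": "2024-01-01T10:05:00",
   "finished_at": "2024-01-01T10:07:00", "status": "needs_attention"},
  {"user": "bob", "started_at": "2024-01-01T10:00:00",
   "finished_at": "2024-01-01T10:06:00", "status": "approved"}
]"""


def average_handle_time(records):
    """Mean seconds between start and finish of a job, grouped by user."""
    durations = defaultdict(list)
    for record in records:
        start = datetime.fromisoformat(record["started_at"])
        end = datetime.fromisoformat(record["finished_at"])
        durations[record["user"]].append((end - start).total_seconds())
    return {user: sum(d) / len(d) for user, d in durations.items()}


def approval_rate(records):
    """Percentage of jobs whose status is 'approved' (0.0 for an empty list)."""
    if not records:
        return 0.0
    approved = sum(1 for record in records if record["status"] == "approved")
    return 100.0 * approved / len(records)


records = json.loads(SAMPLE_EXPORT)
aht_by_user = average_handle_time(records)
approved_pct = approval_rate(records)
```

The same two functions would work on a CSV export after loading the rows with `csv.DictReader`, provided the columns carry equivalent information.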

Professional Managed Services

Complement your platform workflows with expert-driven professional data services from Innodata’s red teaming department:

- Custom dataset creation tailored to unique use cases

- Expert model evaluations combining human and automated assessments

- Complex training data development for supervised fine-tuning

- Human preference optimization rankings for RLHF and DPO

- Multimodal evaluation expertise across text, image, audio, and video models

Enterprise Security Controls

Innodata’s platform provides the right security controls, ensuring enterprise-grade safeguards for sensitive information and AI model usage across four critical security domains:

Authentication & Access Control

- FIDO2 Multi-Factor Authentication (MFA) for enhanced login security

- Role-Based Access Control (RBAC) for granular permission management

- Secure API Authentication for seamless integrations

Data Security

- Encryption at Rest and In Transit for comprehensive data protection

- Data Isolation by Organization to ensure customer data separation

- Web Application Firewall (WAF) protection against common threats

- Secure Multi-Media File Handling for all content types

- Secure Model Integration with protected API endpoints

- Audit Logging and Monitoring for complete activity tracking

- Regular Security Audits to maintain compliance standards

- Penetration Testing for proactive vulnerability identification

Schedule a Demo

Discover how continuous testing and evaluation uncover vulnerabilities and actionable insights for your AI models.
