Quick Concepts

What are Vision Transformers (ViT)?

The world of computer vision, tasked with equipping machines with the ability to “see” and understand the visual world, has long been dominated by Convolutional Neural Networks (CNNs). However, a recent innovation – Vision Transformers (ViTs) – is making waves, challenging the established dominance of CNNs. Let’s delve into what ViTs are, how they work, and why they’re generating so much excitement in the field.

Traditional Approach: Convolutional Neural Networks (CNNs)

For years, CNNs have been the go-to architecture for computer vision tasks like image classification and object detection. CNNs work in a hierarchical manner: each convolutional layer slides small filters across the image, so every unit looks only at a local neighborhood of pixels. Early layers learn to detect edges and simple shapes, and deeper layers combine these into progressively more complex features, culminating in the network’s understanding of the entire image.

While CNNs have achieved remarkable success, they have limitations. Because convolutions are local, a CNN can struggle to capture long-range dependencies within an image: distant regions only influence each other after many layers have been stacked. Imagine a picture of a cat sitting on a mat. A CNN might excel at identifying edges and recognizing the shapes of the cat and mat individually, but it might struggle to grasp the overall relationship between the two objects, namely that the cat is sitting on the mat.
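To make the locality point concrete, here is a minimal sketch that computes the theoretical receptive field of a stack of identical convolutional layers, that is, how many input pixels along one axis can influence a single output unit. The function name `receptive_field` and the layer settings are illustrative assumptions, and the calculation ignores pooling and dilation.

```python
def receptive_field(num_layers: int, kernel_size: int = 3, stride: int = 1) -> int:
    """Input width (in pixels) seen by one output unit after stacked conv layers."""
    field = 1  # a single input pixel sees only itself
    jump = 1   # distance in input pixels between neighboring units at this depth
    for _ in range(num_layers):
        field += (kernel_size - 1) * jump
        jump *= stride
    return field


for layers in (1, 5, 10):
    print(layers, receptive_field(layers))
# 1 3
# 5 11
# 10 21
```

Under these assumptions, ten stacked 3x3 layers still cover only a 21-pixel window, which illustrates why relating distant parts of an image takes depth (or downsampling) in a purely convolutional design.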

Introducing Vision Transformers (ViTs)

ViTs offer a fundamentally different approach to image recognition, drawing inspiration from the success of Transformers in Natural Language Processing (NLP). Transformers, known for their ability to analyze sequential data and capture long-range relationships between words in a sentence, are now being adapted to the visual domain.

Breaking Down an Image

The first step in a ViT involves dividing the input image into a grid of small, fixed-size, non-overlapping patches (16×16 pixels is a common choice). These patches are akin to words in a sentence. Just as a sentence is built from individual words, an image can be understood by analyzing the relationships between its constituent patches.
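The patch-splitting step can be sketched in a few lines of NumPy. This is only an illustration: the helper name `image_to_patches`, the 224×224 image size, and the 16-pixel patch size are assumptions chosen for the example, and the image is random data rather than a real photo.

```python
import numpy as np


def image_to_patches(image: np.ndarray, patch_size: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping square patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    with patches ordered row by row (left to right, top to bottom).
    """
    height, width, channels = image.shape
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    rows, cols = height // patch_size, width // patch_size
    # (H, W, C) -> (rows, patch, cols, patch, C) -> (rows, cols, patch, patch, C)
    grid = image.reshape(rows, patch_size, cols, patch_size, channels)
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape(rows * cols, patch_size * patch_size * channels)


# Random stand-in for an RGB image (assumed size, for illustration only).
image = np.random.default_rng(0).random((224, 224, 3))
patches = image_to_patches(image, patch_size=16)
print(patches.shape)  # (196, 768)
```

With these sizes the image becomes a "sentence" of 196 patches, each holding 16 × 16 × 3 = 768 raw pixel values.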

From Pixels to Embeddings: Patch Embeddings

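Each image patch is then flattened and passed through a learned linear projection, producing a fixed-length vector called an embedding. This embedding captures the essence of the information within the patch, such as its color, texture, and other visual characteristics. Because self-attention has no built-in notion of order, a position embedding is also added to each patch embedding so the model knows where in the image the patch came from.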
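As a rough sketch of this step, the snippet below maps flattened patches to embeddings with a single matrix multiplication. All sizes and variable names are illustrative assumptions, and the weights are random where a real model would learn them. The added position embeddings are also an assumption of this sketch: they are a standard ViT ingredient that gives each patch a sense of where it sits in the image.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes: 196 patches of 16 x 16 x 3 = 768 pixel values each.
num_patches, patch_dim, embed_dim = 196, 768, 768

# Stand-in for flattened patches; a real model would use actual image pixels.
patches = rng.random((num_patches, patch_dim))

# These would be learned during training; random values are used here.
projection = rng.normal(0.0, 0.02, size=(patch_dim, embed_dim))
bias = np.zeros(embed_dim)
position_embeddings = rng.normal(0.0, 0.02, size=(num_patches, embed_dim))

# Linear projection: one embedding vector per patch.
tokens = patches @ projection + bias
# Add position information so the patch order is not lost.
tokens = tokens + position_embeddings
print(tokens.shape)  # (196, 768)
```

The embedding size is a design choice: it can be smaller than, equal to, or larger than the number of raw pixel values in a patch.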

The Core of ViTs: The Transformer Encoder

The heart of a ViT lies in its transformer encoder. This encoder consists of multiple layers, each employing a mechanism called self-attention. Self-attention allows the model to not only analyze each patch individually but also understand how each patch relates to all other patches in the image.

Imagine a ViT processing the image of the cat on the mat. Through self-attention, the model can learn that the patch representing the cat’s paw interacts with the patch representing the mat more than it does with patches depicting the background. This allows the ViT to build a comprehensive understanding of the spatial relationships between objects in the image.
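The idea that every patch is compared with every other patch can be sketched as single-head scaled dot-product self-attention. This is a simplified illustration rather than a full transformer layer: the function names, sizes, and random weights are assumptions, and a real encoder adds multiple heads, residual connections, normalization, and a feed-forward network.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_query, w_key, w_value):
    """Single-head scaled dot-product self-attention over patch embeddings."""
    queries = tokens @ w_query
    keys = tokens @ w_key
    values = tokens @ w_value
    # One score per (patch, patch) pair, scaled by sqrt of the key dimension.
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])
    # Each row is a distribution over all patches, summing to 1.
    weights = softmax(scores, axis=-1)
    # Each output is a weighted mix of the values of all patches.
    return weights @ values, weights


rng = np.random.default_rng(0)
num_patches, embed_dim, head_dim = 196, 64, 32  # illustrative sizes

tokens = rng.normal(size=(num_patches, embed_dim))
# Random stand-ins for the learned query, key, and value projections.
w_query, w_key, w_value = (
    rng.normal(0.0, embed_dim ** -0.5, size=(embed_dim, head_dim)) for _ in range(3)
)

output, weights = self_attention(tokens, w_query, w_key, w_value)
print(output.shape, weights.shape)  # (196, 32) (196, 196)
```

Row `i` of `weights` shows how strongly patch `i` attends to each of the 196 patches. In the cat example, a trained model might assign the paw patch a larger weight on the mat patch than on background patches. The 196 × 196 weight matrix also shows why the cost of attention grows with the square of the number of patches.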

Benefits of ViTs: Advantages Over CNNs

ViTs offer several potential advantages over traditional CNNs:

- Capturing Long-Range Dependencies: As mentioned earlier, ViTs excel at capturing long-range dependencies within an image. This allows them to better understand the relationships between objects and the overall scene.

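- Data Efficiency: Once pre-trained on a large dataset, ViTs can be fine-tuned to strong results on much smaller task-specific datasets. Trained from scratch on limited data, however, they tend to lag behind CNNs, because they lack the built-in locality assumptions that convolutions provide. Pre-trained models are therefore particularly beneficial for tasks where acquiring large amounts of labeled data can be expensive or challenging.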

- Flexibility: The Transformer architecture can be easily adapted to various computer vision tasks beyond image classification, including object detection and image segmentation.

Applications of ViTs: Beyond Image Recognition

The potential applications of ViTs extend far beyond traditional image recognition tasks. Here are a few exciting possibilities:

- Medical Imaging: ViTs could be used to analyze medical scans like X-rays and MRIs, potentially aiding in earlier and more accurate diagnoses.

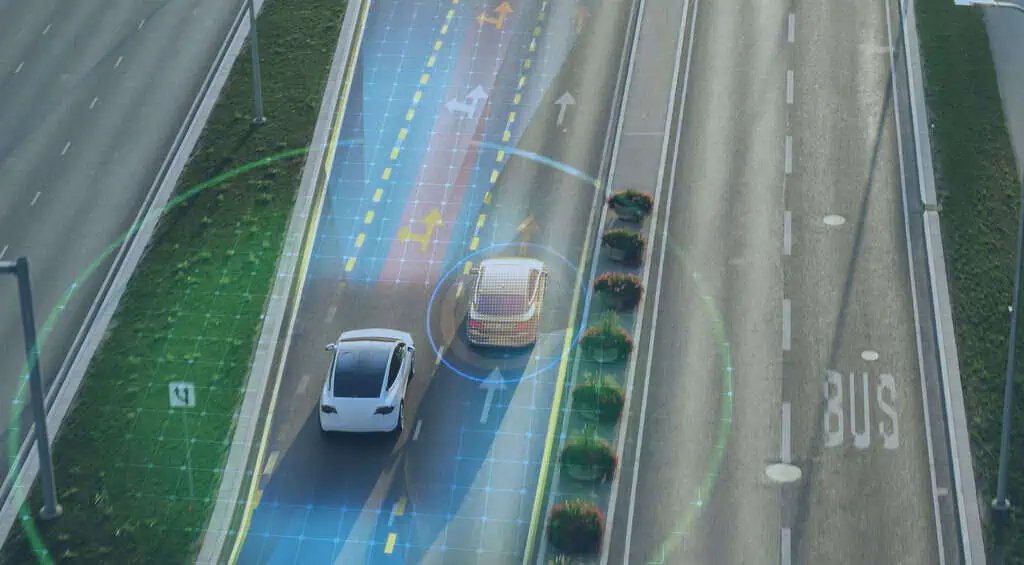

- Autonomous Vehicles: ViTs could be integrated into autonomous vehicles to help them understand complex scenes and navigate their surroundings more effectively.

- Robotics: Robots equipped with ViTs could gain a deeper understanding of their environment, allowing them to perform more intricate tasks.

Challenges and the Future of ViTs

While ViTs offer immense promise, they also face challenges. Self-attention compares every patch with every other patch, so its cost grows quadratically with the number of patches, which often makes ViTs more computationally expensive than CNNs, especially on high-resolution images. They also typically rely on large-scale pre-training, and fine-tuning them for specific tasks can require significant resources.

Despite these challenges, research in ViTs is progressing quickly: new techniques are improving computational efficiency, and the availability of pre-trained models is making adaptation across tasks easier. ViTs represent a major advancement in computer vision due to their ability to capture long-range dependencies and to transfer well from pre-trained models. As research continues to address their limitations, they are expected to become increasingly important in shaping the future of computer vision.

Ready to explore the possibilities of ViTs for your project? Innodata has the experience and resources to help you gather and prepare the massive datasets ViTs require to learn and excel. Let’s chat!
