Best Approaches to Mitigate Bias in AI Models

Machine learning (ML) and artificial intelligence (AI) are progressively more integrated into our everyday lives. The progression of ML and AI has had some backlash caused by bias in the machine learning systems. Even though AI and ML can offer many benefits, bias within programs can lead models to produce harmful outcomes. Bias occurs when algorithms deliver prejudiced results owing to incorrect assumptions in the machine learning process. This blog will examine how the implementation of preventative techniques can help reduce bias in AI systems.

Why It Is Important to Eliminate Bias?

There is increasing demand for AI integration, yet many organizations’ teams have not adequately prioritized data collection processes. If the data collection process is not examined thoroughly, long-term impacts on an organization’s reputation and negative consequences for the public occur. For example, systems skewed by a lack of diverse social representation can cause the AI tools to favor certain outcomes that further marginalize those erroneously left out of the sample.

The power of a machine learning system comes from its ability to interpret data and apply that knowledge to new data systems. It is imperative that the data used to train machine learning algorithms is clean, accurate, well-labeled, and free of bias so the machine learning systems are not skewed.

Types of Bias in AI

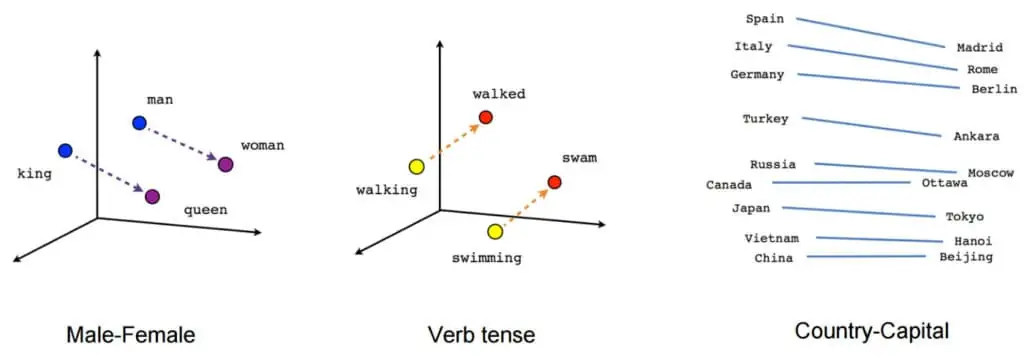

Word Embedding

Word embedding is a natural language processing problem of word association. In algorithmic terms, these are words that are close in the vector space and therefore are expected to have similar meanings. An example of this would be, if you search “king” only males would come up, since male is embedded in the definition of king. But what if you are searching for a doctor online, and it only serves up males? This bias could occur due to the input of data points that correlate “doctor” to men more than to women, edging women out of the model.

Sample Bias / Selection Bias

Sample bias or selection bias occurs when the dataset used is not large or representative enough to teach the system. If a survey were conducted on why people did not vote in an election, and the entire group of people surveyed were exiting a shopping mall, it would theoretically exclude people who are not necessarily from that area, which can cause bias in the sample chosen.

Prejudice Bias / Association Bias

When training data for a machine learning model has been compromised by prejudices and stereotypes, the model perpetuates those learned biases. For example, if someone associates only expensive produce with quality, then if they buy produce that is more expensive and it is better quality, they will continue to associate expensive produce with better quality.

Exclusion Bias

Exclusion bias happens when valuable data is deleted because it is thought to be unimportant. For example, imagine you have a dataset for consumer pick-up truck sales in the United States and you are trying to study conversion rates. If you are capturing data and decide to exclude gender data points, you would miss the fact that 70% of clicks converted were from men and 30% from women. This can lead to an inaccurate representation of the data collected.

Measurement Bias

This bias can occur when there are problems with the measurement and precision of the training model due to human irregularities in the output of data. For example, when survey participants are faced with personal questions that they are embarrassed to answer truthfully, they answer incorrectly, which distorts the results.

Algorithm Bias

Algorithmic bias can occur if there is a flaw in the machine learning model, wherein it picks up an incorrect pattern and reapplies it. For example, women are shown more ads for jobs as secretaries simply because historically more woman than men have been in that type of field.

Anchoring Bias

Anchoring bias occurs when choices on metrics and data are based on personal experience or preference for a specific set of data. Models built on the preferred set, which can be incomplete or contain incorrect data, can lead to invalid results. For instance, if there is a coat for $200 and another for $100, you are prone to see the $100 coat as cheap or inferior. The “anchor,” the $200 coat, unduly influenced your opinion.

Confirmation Bias / Observer Bias

Confirmation bias leads to the tendency to process or look for data or model results that align with one’s currently held beliefs. The generated results and output can strengthen one’s confirmation bias, which leads to inferior outcomes. If someone met a short person who was creative, they could continue to assume other short people are just as creative. They hold this observation as “evidence” and will remember this detail that upholds their belief.

5 Practices to Mitigate Bias in AI Models

1. Dataset Selection

While AI and ML bias can be challenging to mitigate, there are preventative techniques that can help to reduce this problem. The first challenge in identifying bias is seeing how some machine learning algorithms generalize learning from the training data. The selection of datasets used for the model needs to represent the real world. If the dataset only includes a subset of a population, the outcomes will be altered.

2. Diverse Teams

Diversity in your team is the best way to begin eliminating bias. Diversifying a team can have a major positive impact on machine learning models by producing well-rounded, representative datasets. Having a diverse development team can help mitigate prejudicial bias in the architecture of datasets and having a diverse annotator team can assist in lessening prejudicial bias in how taxonomies are applied to the data.

3. Reduce Exclusion Bias

To help reduce exclusion bias in AI from occurring, feature selection is key. When developing quality models, it is important to filter out irrelevant or redundant data points from the model. This specific task excludes the data points that do not have enough variance to explain the outcome variable.

4. Humans-in-the-Loop

Another way to start resolving bias in AI is to have humans–in–the–loop to actively identify patterns of unintended bias. This reduces flaws in the system, creating a more neutral model. Organizations should also set guidelines and procedures that identify and mitigate potential bias in datasets. Documenting cases of bias as they occur, outlining how the bias was found, and communicating these issues can help ensure past instances of bias are not repeated.

5. Representative Data

Prior to aggregating data for model training, organizations should understand what representative data looks like. The substance of the population and the characteristics of data used should equate to a data set with the least amount of bias. Along with identifying the potential bias in datasets, organizations should also document their methods of data selection and cleansing, to eliminate root causes.

Address Bias Before It Corrupts Your Model

AI holds great potential to automate tedious tasks, improve decision-making, and facilitate greater freedom. As models improve and our dependence on them increases, we must be vigilant to ensure that bias does not corrupt the efficacy of models or corrode our trust in them. Potential bias must be considered at every stage of model development, from model architecture to data collection to data annotation, through iterative testing, analysis, and retraining. Customized data annotation solutions and SaaS data annotation platforms can help ensure that AI fulfills its potential as an agent of progress.

follow us