LLM Quality Assurance as a Service

Ensure Your GenAI Applications Are Production-Ready with Enterprise-Grade QA

Innodata delivers scalable, repeatable QA for large language models, combining automation, human expertise, and best practices to ensure your GenAI outputs are accurate, safe, and ready for production. Our proven evaluation framework benchmarks LLM performance on response relevance, accuracy, brand alignment, and safety, enabling continuous improvement, faster launches, and greater confidence in your models.

Why LLM QA Matters

Today’s GenAI products power customer interactions, product discovery, internal tools, and user-generated content at scale. Without a systematic QA process, the LLMs behind them are exposed to the following risks:

LLMs hallucinate, drift, and misclassify — QA identifies silent failures before they impact users.

Tone, refusal, and safety must align with brand — QA ensures output is appropriate, trustworthy, and policy compliant.

Factual errors damage trust — QA validates responses against gold or known-correct answers.

Toxicity and unsafe content must be prevented — QA enforces safety, brand, and community standards.

Inappropriate refusals degrade experience — QA flags unnecessary model refusals that block valid user needs.

Tone misalignment impacts user trust — QA ensures the output matches brand voice and user expectations.

Query logs are goldmines — QA analyzes them to surface unreported failures, broken or unanswered prompts, and behavioral anomalies, enabling proactive prompt tuning and model fixes.

Continuous updates introduce continuous risk — frequent prompt, model, or API changes demand regression testing.

Multi-step logic introduces complexity — agentic systems and copilots require QA for reasoning chains, memory, and tool use.

RAG systems must be grounded — QA verifies that generated responses truly reflect the source documents.
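The log-analysis point in the list above can be illustrated with a small sketch that scans logged prompt/response pairs and flags empty responses and likely refusals. The `LogEntry` type, the `flag_log_failures` function, and the refusal phrase list are all hypothetical; keyword matching is only a stand-in here, and a real pipeline would more likely use a trained classifier or an LLM judge.

```python
import re
from dataclasses import dataclass


# Hypothetical record shape for one logged interaction.
@dataclass
class LogEntry:
    prompt: str
    response: str


# Illustrative refusal phrases (an assumption, not an exhaustive list).
REFUSAL_PATTERNS = re.compile(
    r"\b(i can(?:'|no)t help|i(?:'m| am) unable to|i cannot assist|as an ai)\b",
    re.IGNORECASE,
)


def flag_log_failures(entries: list[LogEntry]) -> dict[str, list[int]]:
    """Return indices of log entries that look like silent failures."""
    flags: dict[str, list[int]] = {"empty": [], "refusal": []}
    for i, entry in enumerate(entries):
        text = entry.response.strip()
        if not text:
            flags["empty"].append(i)  # unanswered or broken prompt
        elif REFUSAL_PATTERNS.search(text):
            flags["refusal"].append(i)  # candidate for refusal review
    return flags


logs = [
    LogEntry("Where is my order?", "Your order shipped on Monday."),
    LogEntry("Reset my password", ""),
    LogEntry("Compare plan A and plan B", "I'm unable to help with that request."),
]
print(flag_log_failures(logs))  # {'empty': [1], 'refusal': [2]}
```

Flagged entries would then go to human review, and confirmed failures can be turned into new regression test cases.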

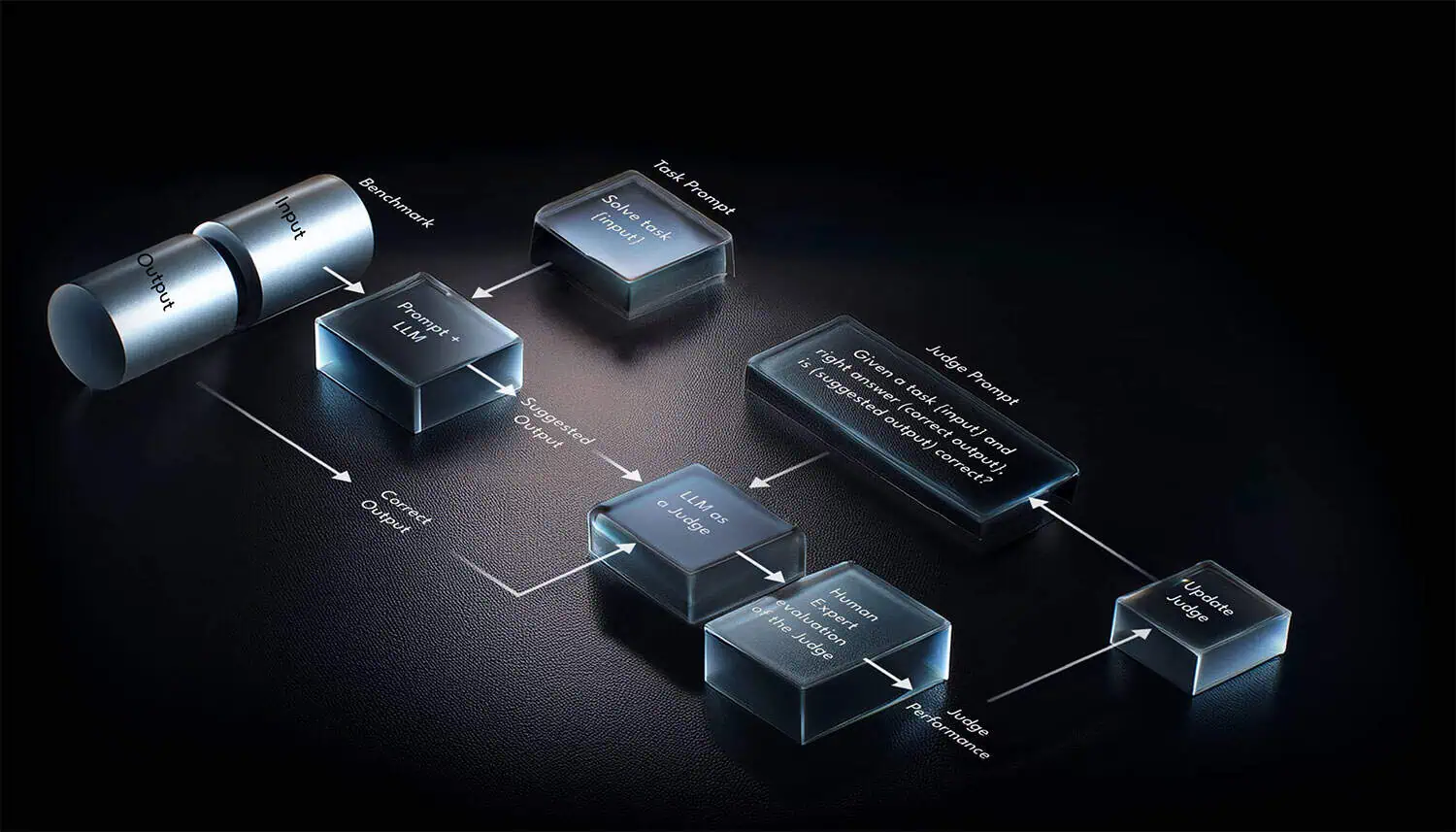

Innodata’s specialized QA approach addresses these challenges through structured testing frameworks: an automated Model Test and Evaluation Platform, scalable LLM-as-a-Judge techniques, human-in-the-loop validation, and continuous improvement loops aligned with real-world production behavior.

How We Measure LLM Quality

Innodata applies a structured QA rubric to evaluate every model output across 12 enterprise-grade dimensions:

Relevance – Does the response directly address the prompt or question?

Contextualization – Is the response aligned to the appropriate context, such as user role, intent, or product nuance?

Accuracy – Is the information factually correct and free from hallucinations?

Formatting – Is the output well-structured, readable, and optimized for UX?

Completeness – Does the output include all necessary elements to fully answer or fulfill the request?

Flexibility – Can the model adapt to variations in phrasing, ambiguity, or changing inputs?

Parameterization – Does the model maintain consistency across controlled variables (e.g., tone, persona, instruction following, and prompt ambiguity and complexity)?

Performance – Is the output delivered within expected latency or within defined UX performance thresholds?

Toxicity & Safety – Is the output free from offensive, unsafe, or policy-violating content?

Response Evasiveness – Does the model avoid unnecessary refusals?

Brand Alignment – Does the response reflect the intended brand tone, friendliness, and personality?

Groundedness (RAG only) – Is the output faithfully derived from retrieved or referenced content?
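One way to picture the rubric is as a fixed set of dimensions, each receiving a score per output. The sketch below assumes a 1-to-5 scale and a pass threshold of 4; both are hypothetical choices for illustration, not Innodata's published scoring scheme, and the `DIMENSIONS` identifiers and `failed_dimensions` function are likewise illustrative. Groundedness is skipped for non-RAG systems, as the rubric specifies.

```python
# The 12 rubric dimensions, as hypothetical snake_case identifiers.
DIMENSIONS = (
    "relevance", "contextualization", "accuracy", "formatting",
    "completeness", "flexibility", "parameterization", "performance",
    "toxicity_safety", "response_evasiveness", "brand_alignment", "groundedness",
)

PASS_THRESHOLD = 4  # assumption: 1-to-5 scale, 4 or higher passes


def failed_dimensions(scores: dict[str, int], is_rag: bool) -> list[str]:
    """Return the applicable dimensions whose score falls below the threshold."""
    # Groundedness only applies to RAG-based systems.
    applicable = [d for d in DIMENSIONS if is_rag or d != "groundedness"]
    missing = [d for d in applicable if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    return [d for d in applicable if scores[d] < PASS_THRESHOLD]


# Example: a non-RAG output that scores well everywhere except brand alignment.
scores = {d: 5 for d in DIMENSIONS if d != "groundedness"}
scores["brand_alignment"] = 3
print(failed_dimensions(scores, is_rag=False))  # ['brand_alignment']
```

Raising on missing scores, rather than treating them as passes, keeps an incomplete review from silently inflating the pass rate.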

Built-In QA Metrics

Our teams track and report key performance indicators such as:

- 100% – Coverage for new features, flows, and prompts

- ≥85% – Regression QA coverage across top tasks, intents, or queries

- ≥80% – Rubric pass rate across all 12 dimensions

- 0 – Guardrail breach tolerance (toxicity, bias, compliance issues)

- ≥95% – Multi-turn stability rate (for agents and copilots)

- ≥90% – Tool use accuracy (for API-integrated or agentic LLMs)

- ≥90% – RAG faithfulness score (citation matches and retrieval grounding)

All Metrics Customizable to Your Success Criteria
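Because each KPI above is a simple threshold, checking one QA cycle against them is mechanical. The sketch below encodes the default targets listed above; the metric keys, the `check_kpis` function, and the measured values in the example are hypothetical.

```python
# Default targets from the KPI list: (comparison, threshold).
# "min": measured value must be at least the threshold.
# "max": measured value must not exceed the threshold.
TARGETS: dict[str, tuple[str, float]] = {
    "new_feature_coverage": ("min", 1.00),
    "regression_coverage": ("min", 0.85),
    "rubric_pass_rate": ("min", 0.80),
    "guardrail_breaches": ("max", 0),
    "multi_turn_stability": ("min", 0.95),
    "tool_use_accuracy": ("min", 0.90),
    "rag_faithfulness": ("min", 0.90),
}


def check_kpis(
    measured: dict[str, float],
    targets: dict[str, tuple[str, float]] = TARGETS,
) -> dict[str, bool]:
    """Return pass/fail for each KPI that was measured in this QA cycle."""
    results = {}
    for name, value in measured.items():
        kind, threshold = targets[name]
        results[name] = value >= threshold if kind == "min" else value <= threshold
    return results


# Hypothetical measurements from one QA cycle of a non-agentic, non-RAG app.
cycle = {
    "new_feature_coverage": 1.0,
    "regression_coverage": 0.88,
    "rubric_pass_rate": 0.76,
    "guardrail_breaches": 0,
}
print(check_kpis(cycle))
# {'new_feature_coverage': True, 'regression_coverage': True,
#  'rubric_pass_rate': False, 'guardrail_breaches': True}
```

Only measured KPIs are checked, so metrics that do not apply (for example, tool use accuracy for an app without tools) are simply omitted, and customizing a target means editing one entry in `TARGETS`.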


Innodata’s Proven Approach for Scaling LLM QA Testing

We provide full-spectrum QA services, purpose-built to support the evolving lifecycle of GenAI applications:

1. Pre-Testing Setup

   - Requirements Traceability Matrix (RTM)
   - Golden and synthetic dataset creation
   - Testbed configuration: prompt templates, models, and environment access and setup

2. New Feature Testing

   - Prompt evaluation
   - Functional and non-functional validation
   - Fallback logic and success criteria coverage

3. Regression & Log-Based Testing

   - Baseline comparison
   - Refreshing test cases from user logs
   - Hallucination and toxicity detection

4. Human-in-the-Loop + Automation

   - LLM-as-a-Judge automation for scalable scoring
   - Expert evaluation and error categorization
   - Dual evaluation methods: freeform response grading + MCQ-style knowledge checks
   - Gold answer benchmarks and rubric-based scoring across 12 dimensions: relevance, factuality, brand alignment, refusal handling, groundedness, etc.
   - Human-in-the-loop reviews and audit-of-audits to ensure rubric alignment
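The baseline comparison in regression testing can be as simple as diffing per-test-case scores between a stored baseline run and a candidate run. This sketch assumes each run maps a test-case ID to a score between 0 and 1 (for example, from an LLM-as-a-Judge or a human reviewer) and uses a hypothetical tolerance of 0.05; the function name and test IDs are illustrative only.

```python
def find_regressions(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, list[str]]:
    """Compare a candidate run against a baseline run, case by case."""
    report: dict[str, list[str]] = {"regressed": [], "missing": [], "new": []}
    for case_id, base_score in baseline.items():
        if case_id not in candidate:
            report["missing"].append(case_id)  # case dropped from the new run
        elif candidate[case_id] < base_score - tolerance:
            report["regressed"].append(case_id)  # score fell beyond tolerance
    # Cases that exist only in the candidate run (e.g. refreshed from user logs).
    report["new"] = sorted(set(candidate) - set(baseline))
    return report


baseline = {"faq-001": 0.92, "faq-002": 0.88, "refund-010": 0.95}
candidate = {"faq-001": 0.93, "faq-002": 0.71, "log-0001": 0.80}
print(find_regressions(baseline, candidate))
# {'regressed': ['faq-002'], 'missing': ['refund-010'], 'new': ['log-0001']}
```

The tolerance absorbs small run-to-run noise in judge scores; reporting missing and new cases separately keeps test-set drift visible instead of letting it hide inside an aggregate score.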

Why Innodata

Proven LLM QA Expertise

- 87M+ LLM labels delivered YTD across 15+ GenAI workflows

- Specialists in hallucination detection, groundedness scoring, brand alignment compliance, and refusal analysis

- Repeatable QA metrics and data-driven insights that inform model tuning, prompt optimization, and roadmap alignment

- Structured QA frameworks for copilots, agents, RAG, and embedded LLMs, adapted for task and product context

Hybrid QA Model


Global, Enterprise-Ready Delivery


Ready to Launch Confidently?

Whether you’re tuning a sales assistant, scaling an AI companion, or deploying a GenAI support tool—Innodata ensures your LLMs are ready for production.

Schedule a Free QA Consultation

Let’s talk about your model goals and testing needs.


Data collection in AI involves gathering diverse and high-quality datasets such as image, audio, text, and sensor data. These datasets are essential for training AI and machine learning (ML) models to perform tasks like speech recognition, document processing, and image classification. Reliable AI data collection ensures robust model development and better outcomes.

Innodata provides comprehensive data collection services tailored to your AI needs, including:

- Image data collection

- Video data collection

- Speech and audio data collection

- Text and document collection

- LiDAR data collection

- Sensor data collection

- And more…

Synthetic data generation creates statistically accurate, artificial datasets that mirror real-world data. This is especially beneficial when access to real-world data is limited or sensitive. Synthetic data helps with:

- Data augmentation to expand existing datasets.

- Privacy compliance by generating non-identifiable replicas of sensitive data.

- Generative AI applications requiring unique or rare scenarios.

- And more…

Innodata offers synthetic training data tailored to your specific needs. Our solutions include:

- Synthetic text generation for NLP models.

- Synthetic data augmentation for enriching datasets with diverse scenarios.

- Custom synthetic data creation for unique edge cases or restricted domains.

- And more…

These services enable efficient AI data generation while maintaining quality and compliance.

Innodata’s data collection and synthetic data solutions support various industries, such as:

- Healthcare for medical document and speech data collection.

- Finance for document collection, including invoices and bank statements.

- Retail for image data collection, such as product images.

- Autonomous vehicles for LiDAR data collection and sensor data.

- And more…

If you’re looking at AI data collection companies, consider Innodata’s:

- Expertise in sourcing multimodal datasets, including text, speech, and sensor data.

- Global coverage with support for 85+ languages and dialects.

- Fast, scalable delivery of training data collection services for AI projects.

Our synthetic data solutions can enhance existing datasets by creating synthetic variations. This approach supports AI data augmentation, ensuring diverse training scenarios for robust model development.

We deliver high-quality datasets, including:

- Image datasets such as surveillance footage and retail product images.

- Audio datasets like customer service calls and podcast recordings.

- Text and document datasets for financial, legal, and multilingual applications.

- Synthetic datasets for generative AI, tailored to your specific requirements.

- And more…

Synthetic data replicates the statistical properties of real-world datasets without including identifiable information. This makes it an excellent option for training AI models while adhering to strict privacy regulations.
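To make "replicates the statistical properties" concrete, the toy sketch below fits the mean and standard deviation of one numeric column and samples new values from a normal distribution with those parameters. It is a deliberately simple illustration that assumes the column is roughly normal. It preserves only first- and second-order statistics of a single column, and on its own it is not a privacy guarantee; production generators typically model joint distributions across columns and may add formal protections. The function name and sample data are hypothetical.

```python
import random
import statistics


def synthesize_column(values: list[float], n: int, seed: int = 0) -> list[float]:
    """Sample n synthetic values matching the mean and stdev of `values`.

    Assumes the column is roughly normal; original values are never copied.
    """
    mu = statistics.mean(values)
    sigma = statistics.stdev(values)
    rng = random.Random(seed)  # seeded for reproducibility
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical transaction amounts standing in for a sensitive column.
real = [120.0, 95.5, 130.2, 110.8, 101.3, 125.9, 99.4, 117.6]
synthetic = synthesize_column(real, n=10_000)
print(round(statistics.mean(real), 1), round(statistics.mean(synthetic), 1))
```

With a large sample, the synthetic mean and standard deviation land close to the originals, while no individual real record appears in the output.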

Data collection involves sourcing real-world datasets from various modalities like image, audio, and text, while data generation creates artificial (synthetic) data that mimics real-world data. Both approaches are crucial for building versatile and high-performing AI models.

We offer LiDAR data collection for applications in autonomous vehicles, robotics, and environmental analysis, providing high-quality datasets for precise model training.