Data-Centric AI: Optimizing Data for Generative AI Fine-Tuning

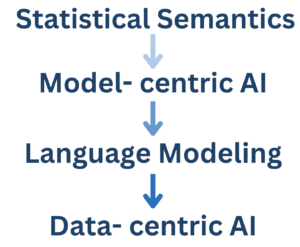

In this era of Generative AI, where artificial intelligence is continually pushing boundaries and expanding its potential, data is more pivotal than ever. Artificial intelligence (AI) has experienced both evolutionary and revolutionary change: from statistical semantics to model-centric AI to data-centric AI, the journey has culminated in a present-day focus on generative AI, redefining how we harness AI’s potential. Data-centricity is the basis of fine-tuning in generative AI, where a limited amount of data must go a long way, and data quality makes or breaks the model’s performance. The following is a discussion of the history, best practices, and tools of data-centric AI as applied to data preparation for model training and fine-tuning.

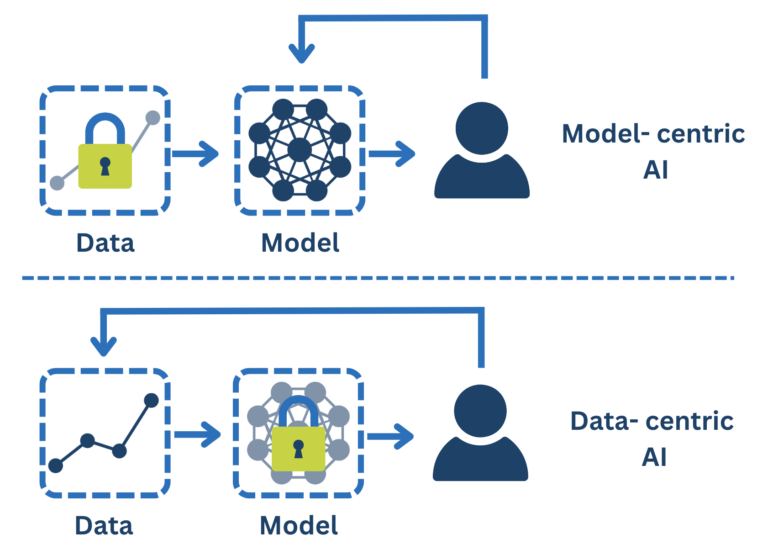

What is Data-Centric AI?

Data-Centric AI is a mindset that places data quality above model development. In today’s landscape, impressive neural network architectures and generative AI models are widely accessible. In addition, building one’s own generative AI model is beyond the scope of most small businesses.

Hence, the era of model-centric approaches is declining, yielding minimal incremental gains for all but the largest organizations. Fine-tuning existing generative AI models using a data-centric approach is now one of the most efficient ways for organizations to reap the benefits of generative AI.

Drawing a parallel to Andrew Ng’s analogy of AI as the new electricity, data embodies immense untapped potential energy. As the backbone of AI, data holds the key to exceeding the limits of model-centric methodologies.

The Evolution of Data-Centric AI

The idea of data-centric AI was decades in the making. It is a product of a series of technological advancements and shifts in thinking. As the scope of AI’s potential has widened, so has the focus sharpened on the data that feeds it.

Statistical Semantics

Emerging in the early 2000s, statistical methods and the era of ‘big data’ catalyzed a paradigm shift in machine learning. The profound impact of processing vast datasets became evident in remarkable tools such as Google Translate. This marked the age of ‘statistical semantics,’ a period characterized by transformational data-driven insights on language processing and machine translation accuracy.

Model-Centric AI

The subsequent years between 2010 and 2015 witnessed the ascendancy of Neural Networks, bolstered by the power of GPUs. This convergence unleashed unprecedented computational prowess, turning statistical semantics problems into optimization tasks within deep neural networks. The community’s momentum led to the rise of model-centric AI, with transformative architectures like CNNs, LSTMs, and the game-changing Transformer.

During this era, Innodata’s foray into the field began when they established “Innodata Labs” in 2016, showcasing early steps in neural network adoption. As the excitement of model-centric AI grew, legacy systems were reimagined and accuracy surged, amplifying the potential of AI solutions.

Language Modeling

The introduction of large language models around 2018 accelerated progress. Swift training and improved generalization paved the way for innovations like prompt engineering and versatile text-to-text systems. However, over the past four years, the technology’s foundation has stabilized, prompting a shift towards a more profound consideration: the role of data in driving AI excellence.

Data-Centric AI

In the words of many product managers, “Give me 80% accuracy and we’re good!” This perspective, while common, often falls short in practice. For intricate documents like medical records or legal and financial documents, which demand high-quality outputs, the difference between 80% and perfection is immense. The reality is that the last mile, the pinnacle of AI performance, revolves around data.

Data-centric AI is where the final breakthroughs occur. Beyond the allure of bigger language models and sophisticated tricks, it is meticulous attention to data that defines success. Accuracy gains become cost savings, particularly in domains where errors can be costly. As the saying goes, “All eyes on the data.”

Why is Data-Centric AI important?

Systematic Labeling Errors

Is your model underperforming? The blame often lies with flawed data, a legitimate assertion in many cases. Consider the MNIST dataset – a staple for data scientists, serving as the ‘Hello World’ of machine learning. Although seemingly straightforward – consisting of 70k handwritten digits – this dataset harbors legitimate annotation errors. Despite expectations of unambiguous labeling, discrepancies persist. It is now acknowledged that a fraction of the data contains errors, imposing a theoretical ceiling on achievable accuracy. The best systems attain an error rate of 0.17%, with some claims of perfection met with skepticism due to potential data leakage.

In 2019, Lyft unveiled the extensive “Level 5 Open Data,” a dataset for autonomous vehicles. On the surface, it appeared flawless, featuring meticulous annotations and overlays. However, Stanford researchers uncovered a startling truth – over 70% of the validation data lacked at least one bounding box annotation.

This phenomenon permeates existing datasets. MIT researchers quantified systematic labeling errors within popular benchmarks, revealing error rates as high as 5%. These inaccuracies serve as a performance limit for AI systems. Notably, Neural Networks can even learn these errors and propagate them in real-world predictions.

Even in seemingly straightforward data capture scenarios, room for annotation errors persist. For instance, capturing and normalizing interest rates within investment prospectuses might seem straightforward. However, ambiguity surfaces as annotators employ various methods, leading to inconsistent representations like 4.2%, 0.42, 4.2, and 4.20%. The machine must recognize these variations, overcoming the extra complexity added by the annotators’ diverse approaches, further emphasizing the importance of data quality and precision in AI model training and fine-tuning.

How does Data-Centric AI Work?

In a data-centric approach, the machine learning engineer spends most of her time on the data (keeping the algorithm and other resources fixed).

Role of the engineer:

- Tools to enforce consistent and learnable annotations

- Tools to analyze blind spots, bias, inconsistencies, noisy labels

- Tools to augment data

- Tools to collect data more efficiently

- Tools to select the best data to annotate next

1. Tools to enforce consistent and learnable annotations

Consistency is king. A dataset is consistent when multiple annotators do the tagging in exactly the same way. Innodata primarily uses two tools for consistency:

- Annotation guidelines detailing what to extract (e.g., name of a person in a contract), when to extract it (extract the name when it’s a person, not when it’s a company), where to extract it (name might appear in multiple locations), how to extract it (should we normalize, recapitalize, markup first name/middle name/last name, etc.)

- Double pass workflows with arbitration, where two independent annotators work on the same document and their work is compared in order to detect differences. This approach would identify cases such as the earlier example, where one annotator might a percentage with two decimals (4.20%) while another would ignore trailing zeros (4.2%). All differences are arbitrated by a third annotator who takes a final decision, briefs the annotation team, and augments the annotation guideline with clarifications when needed. In this case, they could inform all annotators that a percentage must always be captured and normalized to two decimals (4.20%)

Learnability means that the problem is defined in a way that takes into account the strengths and limitations of the machine learning model at hand. In the context of Innodata’s Document Intelligence platform, for example, it means:

- A taxonomy builder for an analyst to define a data model within the limits of the possible. This tool allows for defining data types, grouping data, and defining mandatory and optional fields.

- A task-specific annotation workbench where the annotation work is as foolproof as possible. The workbench warns the user if annotations are incomplete (e.g., entity relation requires linking 2 entities minimum) or invalid (e.g., intersecting bounding boxes), and allows the user to visualize progress (e.g., highlighted sequence).This is usually tightly integrated with the annotation guidelines. Since each task is different, what is possible in one task might be invalid in another.

2. Tools to analyze blind spots, bias, inconsistencies, noisy labels

When people think of the problem of bias in AI systems, they mostly think about gender bias, racial bias and other social biases that render systems unfair and even dangerous. However, bias is a complex topic that can easily go beyond the capabilities of modern toolkits.

Here are two ways to check data ‘distribution,’ while recognizing that there is a layer of ethics and judgment required to analyze data in the real world.

- Label and feature distribution:

- Are labels (classes) representative of the real world? Biased labels, in this context, refer to labels that do not fit the real world. A grocery store dataset where fresh fruits and vegetables are ignored because they cannot be tracked properly, for instance, would be biased in representing only meat, canned food, etc.

- Similarly, are all features sufficiently represented? This is straightforward to automate: you can calculate feature average and standard deviation, check for a long-tail in the distribution, and put the numbers in bar charts, pie charts, tables, word clouds, etc. This step can inform active learning (see below) or – with the help of a data scientist – inform data curation, generate synthetic data, or take other corrective actions.

Analyzing a dataset in such a way is a mandatory step in data science. There are plenty of examples on Kaggle, for instance: people ‘show’ the data before building a model. Here is a simple, visual example selected at random.

- Collisions and frequent error detection are two simple techniques for finding dataset problems. When two documents or instances have exactly the same feature representation but are labeled differently, this indicates with certainty that there is a labeling error or the features are not expressive enough. Similarly, if a cluster of documents is consistently incorrectly predicted in the test set, they are either mislabeled or underrepresented.

3. Tools to augment data

Data augmentation techniques such as adding noise or substituting data in existing documents are now standard in computer vision (flip an image, blur an image, zoom in, zoom out, add a filter, etc.) and getting traction in other modalities. More and more, we see the use of machine translation and synonym substitution, for instance. Or, in the example of entity extraction from text: imagine there are paragraphs from which you need to extract names of people. Once properly marked up, you can use generative AI to augment the dataset size significantly.

Here is a made-up example. If we prompt a generative model with these three sentences:

- <per>Jim</per> bought 300 shares of <org>Acme Corp.</org> in 2006.

- <per>Michael Jordan</per> is a professor at <org>Berkeley</org>.

- The automotive company created by <per>Henry Ford</per> in 1903 can be referred to as <org>Ford</org> or <org>Ford Motor Company</org>.

It produces the following new sentence which can be seen as ‘almost free’ training data:

- <per>William Kennedy</per> is the CEO at <org>Ford</org>.

Synthetic data (creating artificial documents) is becoming increasingly powerful, as it provides solutions to various real-world problems:

- It enables working on a model-centric data flow without waiting for the real data. It is seen as a good practice to always ‘check’ that a model works and can perfectly predict synthetic data before looking at the real data.

- It enables demonstrating the feasibility of a system even if data is scarce or private.

- It enables generating data for classes or features that are very rare (e.g., text in small population dialects) or long tail (physicians’ prescriptions for all possible drugs).

- It includes photorealistic and physics-based simulations.

Programmatic annotations (creating annotations using rule-based systems) is another trend in the field. A legacy expert system built over decades can be used to ‘annotate’ documents and create large training datasets, for instance. Or, simple pattern matching rules can be used to ‘prime the pump’ and create synthetic data.

4. Tools to collect data more efficiently

Academic projects and Kaggle competitions generally operate on fixed datasets. Solving a business problem with ML is quite different: the data is perpetually changing. Most of the time, data is produced in real-time and its properties and distribution can vary greatly over time. In a way, data collection becomes a life-long process that must be kept economically viable.

- The online feedback loop is an example of data science and engineering prowess. It involves intercepting the right data at the right time in a business workflow, running machine predictions, and capturing as much user feedback as possible. Most of the time, the annotation is incomplete (e.g., users might flag or discard errors without fixing them) and documents must loop back into an annotation workflow. Also, there is typically a significant amount of coupling across the workflow, which means that small changes in how the business runs might break the machine learning loop and render it useless. Google published a landmark paper in 2015 describing everything that can go wrong when building an online feedback loop.

- ML-centric UI / UX: changing the existing user experience is always difficult. “Adding work” to the end user in order to capture data well-suited for ML often initially meets resistance. Users expect automation and simplification, yet here it is often the other way around: they are asked to work differently in order to train the machine. Finding the right compromise, that preserves user quality of life while unlocking the promise of AI, requires expertise in UI/UX.

5. Tools to select the best data to annotate next

Borrowing its name from educational contexts where students tailor their learning path, active learning has found a transformative application in machine learning. This methodology revolves around the judicious selection of documents from vast, unlabeled datasets, in order to determine which documents to annotate next.

The data requirements for model training and their associated costs are considerable, often involving specialized and sometimes expensive subject matter experts (SMEs). When each document’s annotation bears a $1 price tag and the aspiration is to assemble 50k documents to construct a robust ML model, an upfront budget of $50k is imperative. Similarly, if a monthly budget of $1k is allocated for continuous model maintenance, the strategic question becomes: How can this investment be optimized for the utmost utility?

Within this framework, active learning addresses some fundamental questions:

- Pool-Based Sampling: Delving into pool-based sampling, the question is: can exceptional accuracy and robustness be attained by selecting a subset of the initial collection—say 20k, 30k, or 40k documents—instead of the complete 50k, thereby yielding substantial cost savings? This approach benefits from a comprehensive view of the entire dataset, enabling the evaluation of data factors such as uniqueness, distribution, chronological context, and more.

- Stream-Based Selective Sampling: Transitioning to stream-based selective sampling, the emphasis shifts to real-world scenarios. Once a model is deployed, maintaining its accuracy assumes paramount importance. This entails strategically selecting a limited number of examples for continuous annotation. The challenge now is devising criteria to guide the selection process—should an example be annotated if it lacks confidence, deviates from the known distribution, or introduces novel feature values? Maximizing value within a fixed budget framework hinges on these decisions.

Active learning and stringent data quality control are at the heart of data-centric AI.

Conclusion

In a time when machine learning algorithms and generative AI models are widely accessible, the quality of data continues to be a pivotal factor in determining the performance of these models. Unlocking the potential of automation and substantial cost savings hinges on thoughtful, cost-efficient model training and fine-tuning of models using a data-centric AI approach. This includes a number of tools that address bias in training data, accurate and consistent data labeling, data selection and augmentation, and data collection. As generative AI adoption and integration proliferate, a data-centric approach to model training and fine-tuning emerges as the engine that powers model performance and propels organizations into the future.

Bring Intelligence to Your Enterprise Processes with Generative AI

Whether you have existing generative AI models or want to integrate them into your operations, we offer a comprehensive suite of services to unlock their full potential.

follow us