How to Enhance ChatGPT with Prompt Engineering

In November 2022, OpenAI launched ChatGPT, built on its GPT-3.5 language models. Leading experts around the world are taking notice of the tool, which gained more than one million users less than a month after its introduction. Countless screenshots and user testimonials online show how useful ChatGPT has been for everyone from professionals to university students, bloggers, and influencers.

ChatGPT is a powerful large language model (LLM) that can generate text in response to a wide variety of prompts. These models continue to generate better results over time, but it can be challenging to get exactly the result you want. To get the most out of ChatGPT, it is important to carefully consider the prompts you use when interacting with the model.

Improving your prompt engineering skills is one of the most efficient ways to take advantage of what these massive models can do. High-quality prompts can direct ChatGPT toward particular tasks or subjects and enhance the relevance and coherence of the model’s responses.

What is Prompt Engineering?

Prompt engineering is the process of crafting high-quality prompts to interact with a language model. The goal of prompt engineering is to provide the language model with the necessary context and information to generate relevant and coherent text in response to the given prompt.

The process of prompt engineering involves carefully considering the language, structure, and content of the prompt, in order to guide the language model towards the desired outcome. This means providing specific instructions, asking targeted questions, or providing additional context that the LLM can use to generate text that is tailored to the task at hand.
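As a rough illustration of the three ingredients named above (specific instructions, a targeted question, and additional context), here is a minimal Python sketch that assembles them into a single prompt string. The names `PromptParts` and `build_prompt` are hypothetical helpers invented for this example, not part of any ChatGPT or OpenAI API, and the code only builds text; it does not call a model.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Hypothetical container for the ingredients of a prompt."""
    instruction: str      # what the model should do
    context: str = ""     # background the model needs
    question: str = ""    # a targeted question, if any


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty parts into one prompt, separated by blank lines."""
    sections = [parts.instruction, parts.context, parts.question]
    return "\n\n".join(s.strip() for s in sections if s.strip())


prompt = build_prompt(PromptParts(
    instruction="Summarize the text below in two sentences for a general audience.",
    context="Text: ChatGPT is a large language model launched by OpenAI in November 2022.",
))
print(prompt)
```

Keeping the parts separate makes it easy to change one ingredient, such as the context, while holding the others fixed, which helps when comparing how the model responds to different versions of a prompt.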

Effective prompt engineering can be critical when using language models for tasks such as writing, translation, or chatbots, where the quality of the output depends heavily on the quality of the input prompt. Well-designed prompts can improve the accuracy, relevance, and coherence of the language model’s output, as well as the user experience.

Tips for Enhancing ChatGPT

- Start with a clear objective: Decide what you want the chatbot to accomplish with the prompt. This could be providing information, directing the conversation, or addressing an issue. If your question is vague, the answer will be vague too. Instead of asking a general question like “What is the weather like today?”, try asking a more specific question like “What is the temperature in New York City right now?”

- Provide context: Large language models perform best when they have enough context to understand the task or topic at hand. When crafting a prompt, try to provide as much relevant context as possible. This could include things like previous messages in a chat, links to articles or other resources, or a summary of the topic you want to discuss.

- Use natural language: ChatGPT is designed to understand natural language, so try to phrase your prompts in a way that feels conversational. Avoid using overly technical language or jargon that the model may not be familiar with.

- Experiment with different styles: ChatGPT can generate text in a wide variety of styles, from formal to conversational, humorous to serious. Try experimenting with different styles of prompts to see how the model responds. A conversational prompt might result in a more casual and comfortable response, whereas a formal prompt might produce one that is more structured and precise. You may find that certain styles work better for certain tasks or topics.

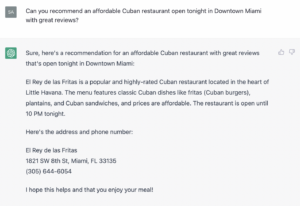

- Avoid open-ended questions: Do not pose questions with ambiguous or open-ended answers. Doing so may cause the chatbot to respond in a way that is irrelevant or unclear. Here is an example of a vague prompt compared with an engineered prompt:

A prompt such as “Where should I go for dinner?” is too ambiguous and the model cannot give a useful recommendation without more context.

A specific prompt such as “Can you recommend an affordable Cuban restaurant open tonight in Downtown Miami with great reviews?” has much more detail and enables the model to recommend a restaurant with an address, phone number, and highlights from the menu.
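The contrast between the vague and the specific dinner prompt above can be sketched in Python. This is only an illustration under stated assumptions: `make_specific` is a hypothetical helper invented here, and the detail labels (`cuisine`, `budget`, and so on) are made up for the example. The code builds the prompt text and does not send it to any model.

```python
def make_specific(request: str, details: dict[str, str]) -> str:
    """Append labelled details to a vague request so the model has context.

    Hypothetical helper: returns the request unchanged when no details are given.
    """
    if not details:
        return request
    lines = [f"- {name}: {value}" for name, value in details.items()]
    return request + "\nPlease take these details into account:\n" + "\n".join(lines)


vague = "Where should I go for dinner?"

# The same request, with the details from the restaurant example spelled out.
specific = make_specific(vague, {
    "cuisine": "Cuban",
    "budget": "affordable",
    "location": "Downtown Miami",
    "when": "open tonight",
    "reviews": "great reviews",
})
print(specific)
```

Listing the details explicitly is one possible design; writing them into a single natural-language sentence, as in the example prompt above, works just as well and may feel more conversational.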

No technology, especially at this scale, comes without risks and challenges. In many ways, LLMs are a game-changer, and this technology is now widely used with few limitations or controls. There are several potential pitfalls, including biased and inaccurate output.

Prompt engineering is a powerful tool for getting the most out of large language models such as ChatGPT. By carefully crafting your prompts, you can improve the relevance and coherence of ChatGPT’s responses and guide the model towards specific tasks or topics. With some experimentation and practice, you can become an expert at using prompt engineering to tap into the staggering capabilities of large language models.
